Part A: A Practical Introduction to Text Classification

Multi-Topic Text Classification with Various Deep Learning Models

Author: Murat Karakaya

Date created….. 17 09 2021

Date published… 11 03 2022

Last modified…. 12 03 2022

Description: This is Part A of the tutorial series that covers all the phases of text classification:

- Exploratory Data Analysis (EDA)

- Text preprocessing

- TF Data Pipeline

- Keras TextVectorization preprocessing layer

- Multi-class (multi-topic) text classification

- Deep Learning model design & end-to-end model implementation

- Performance evaluation & metrics

- Generating a classification report

- Hyper-parameter tuning

- etc.

We will design various Deep Learning models by using

- the Keras Embedding layer,

- Convolutional (Conv1D) layer,

- Recurrent (LSTM) layer,

- Transformer Encoder block, and

- pre-trained transformer (BERT).

We will cover all the topics related to solving Multi-Class Text Classification problems with sample implementations in the Python / TensorFlow / Keras environment.

We will use a Kaggle dataset that contains 32 topics and more than 400K reviews in total.

If you would like to learn more about Deep Learning with practical coding examples,

- Please subscribe to the Murat Karakaya Akademi YouTube Channel or

- Follow my blog on muratkarakaya.net

- Do not forget to turn on notifications so that you will be notified when new parts are uploaded.

PARTS

This tutorial series consists of several parts that cover Text Classification with various Deep Learning Models. You can access all the parts from this index page.

PART A: A PRACTICAL INTRODUCTION TO TEXT CLASSIFICATION

What is Text Classification?

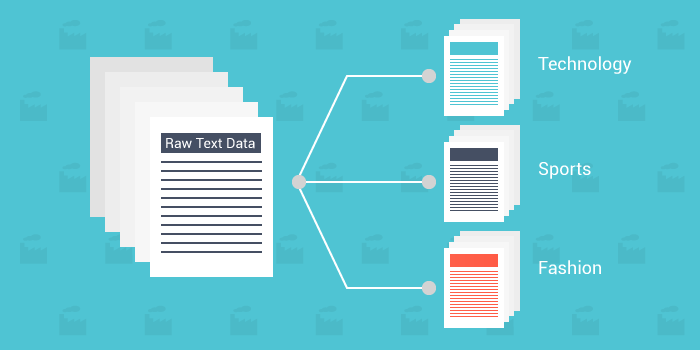

Text classification is a machine learning technique that assigns one or more categories (labels/classes/topics) from a predefined set to open-ended text.

The categories depend on the selected dataset and can cover arbitrary subjects. Therefore, text classifiers can be used to organize, structure, and categorize any kind of text.

Types of Classification

In general, there are 3 types of classification:

- Binary classification

- Multi-class classification

- Multi-label classification

Binary classification is the task of classifying samples into two groups: is it an apple or an orange?

Multi-class classification assumes that each sample is assigned one and only one label, while the number of classes can be more than two: a fruit can be an apple, an orange, or a pear, but not two or all three of them at the same time.

Multi-label classification assigns each sample a set of target labels: a fruit salad, for example, can contain apple, orange, and pear at the same time.
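To make the three settings concrete, here is a minimal NumPy sketch of how targets are typically encoded and how a model's raw output scores are typically turned into predictions. The fruit labels, the example scores, and the 0.5 threshold are illustrative assumptions; in Keras these settings usually correspond to a single sigmoid output unit, a softmax layer with one unit per class, and one independent sigmoid unit per label, respectively.

```python
import numpy as np

CLASSES = ["apple", "orange", "pear"]  # illustrative label set (assumption)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    z = np.asarray(z, dtype=float)
    e = np.exp(z - np.max(z))  # subtract the max for numerical stability
    return e / e.sum()

# Binary: one score -> probability of the positive class ("orange" vs. "apple").
def predict_binary(score, threshold=0.5):
    return "orange" if sigmoid(score) >= threshold else "apple"

# Multi-class: one score per class -> exactly one label (argmax of the softmax).
def predict_multiclass(scores):
    return CLASSES[int(np.argmax(softmax(scores)))]

# Multi-label: an independent sigmoid per class -> any subset of the labels.
def predict_multilabel(scores, threshold=0.5):
    probs = sigmoid(np.asarray(scores, dtype=float))
    return [c for c, p in zip(CLASSES, probs) if p >= threshold]

# Target encodings for the same three settings.
binary_target = 1                          # e.g. 1 = orange, 0 = apple
multiclass_target = np.eye(3)[1]           # one-hot: exactly one 1 -> "orange"
multilabel_target = np.array([1, 1, 1])    # multi-hot: a salad with all three

print(predict_binary(2.0))                     # orange
print(predict_multiclass([0.1, 0.3, 2.5]))     # pear
print(predict_multilabel([3.0, 1.2, -2.0]))    # ['apple', 'orange']
```

The key design difference is that softmax makes the class probabilities compete (they sum to 1), whereas independent sigmoids let several labels be "on" at once.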

You can learn the details of classification and how to design Deep Learning models for these classification types in the following playlists:

- Classification with Keras & Tensorflow

- Keras ve Tensorflow ile Sınıflandırma (in Turkish)

In this tutorial, we will deal with a multi-class text classification problem.

How and Where can we use Text Classifiers?

Text classifiers can be used to organize, structure, and categorize almost any kind of text, such as documents, medical studies, files, and content from all over the web. For example,

- news articles can be organized by topics;

- support tickets can be organized by urgency;

- chat conversations can be organized by language;

- brand mentions can be organized by sentiment;

- and so on.

What are the Automatic Text Classification Approaches?

There are many approaches to automatic text classification such as:

- Rule-based systems

- Machine learning-based systems

- Deep learning-based systems

- Hybrid systems

In this tutorial series, we will focus on Deep learning-based systems.

These approaches can be used in supervised or unsupervised learning settings.

- Supervised Learning: Common approaches use supervised learning to classify texts. These conventional text classification approaches usually require a large amount of labeled training data.

- Unsupervised Learning: In practice, however, an annotated text dataset for training state-of-the-art classification algorithms is often unavailable, because annotating (labeling) data usually involves a lot of manual effort and high costs. Unsupervised approaches therefore offer a way to run low-cost text classification on unlabeled datasets.

In this tutorial series, we will focus on the Supervised Learning methods since we have a labeled dataset.

What Types of Deep Learning (DL) models are used in Text Classification?

First of all, in DL, we encode the text by using an important technique called embedding.

You can encode the text by applying the embedding to different granularities of the text:

- Word Embedding

- Sentence Embedding

- Paragraph Embedding, etc.

Embedding basically converts text into a numeric representation, usually a dense, high-dimensional vector of real numbers.
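As a minimal sketch of the idea, an embedding layer behaves like a trainable lookup table: each token id selects one row of a matrix. The toy vocabulary, the 4-dimensional vectors, and the random initialization below are assumptions for illustration; in a real model the matrix values are learned during training. Averaging the word vectors is shown as one simple (assumed) way to obtain a sentence-level vector.

```python
import numpy as np

# Toy vocabulary (assumption): index 0 is reserved for padding, 1 for unknown words.
vocab = {"<pad>": 0, "<unk>": 1, "the": 2, "movie": 3, "was": 4, "great": 5}
EMBED_DIM = 4  # illustrative embedding size

rng = np.random.default_rng(seed=42)
# One row per vocabulary entry; a trained model would learn these values.
embedding_matrix = rng.normal(size=(len(vocab), EMBED_DIM))

def encode(sentence):
    """Map each whitespace-separated word to its integer id (unknown -> 1)."""
    return [vocab.get(w, vocab["<unk>"]) for w in sentence.lower().split()]

def embed(token_ids):
    """Word embedding: look up one dense vector per token id."""
    return embedding_matrix[token_ids]  # shape: (num_tokens, EMBED_DIM)

ids = encode("The movie was great")
word_vectors = embed(ids)
sentence_vector = word_vectors.mean(axis=0)  # crude sentence embedding

print(ids)                     # [2, 3, 4, 5]
print(word_vectors.shape)      # (4, 4)
print(sentence_vector.shape)   # (4,)
```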

Frequently used word representation and embedding methods are:

- One Hot Encoding,

- TF-IDF,

- Word2Vec,

- FastText,

- Pre-trained language models used as an Embedding layer

- An Embedding layer trained along with the model

One of these techniques (or, in some cases, several of them) is chosen according to the state and size of the data and the purpose of processing it.
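The first two representations in the list above (one-hot encoding and TF-IDF) are simple enough to compute by hand. The sketch below does so for a made-up three-document corpus. The TF-IDF variant used here (raw term count times log(N / df)) is only one of several common formulations, so library implementations such as scikit-learn's, which by default smooth the idf and normalize the vectors, will give different numbers.

```python
import math
import numpy as np

# Made-up toy corpus (assumption).
corpus = [
    "the match ended in a draw",
    "the election results were announced",
    "the team won the match",
]
docs = [doc.split() for doc in corpus]
vocab = sorted({w for doc in docs for w in doc})
index = {w: i for i, w in enumerate(vocab)}

def one_hot(word):
    """One-hot: len(vocab) zeros with a single 1 at the word's index."""
    vec = np.zeros(len(vocab))
    vec[index[word]] = 1.0
    return vec

def tf_idf(doc_tokens):
    """TF-IDF with raw term counts and idf = log(N / df)."""
    n_docs = len(docs)
    vec = np.zeros(len(vocab))
    for word in set(doc_tokens):
        tf = doc_tokens.count(word)                 # occurrences in this document
        df = sum(1 for d in docs if word in d)      # documents containing the word
        vec[index[word]] = tf * math.log(n_docs / df)
    return vec

weights = tf_idf(docs[2])
print(one_hot("match").sum())            # 1.0
print(weights[index["the"]])             # 0.0 ("the" occurs in every document)
print(round(weights[index["won"]], 3))   # 1.099 (log(3): "won" occurs in one document)
```

Note how a very common word like "the" gets zero weight, while a word that distinguishes one document from the others gets a high weight.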

You can learn the details of embedding and how Deep Learning models apply it for text classification in the following playlists:

- Word Embedding in Keras

- Word Embedding Hakkında Herşey (in Turkish)

A basic DL model can use an embedding layer as its initial layer, followed by a couple of dense layers for classification, as we will see in Part E.

In Parts E, F, G, and H, we will train an embedding layer during model training.

In Part I, we will use a pre-trained embedding layer from a well-known transformer model: BERT.

What are the Common Deep Learning Architectures for Text Classification?

We can summarize the architectures of the DL models used in text classification as follows:

- Embedding + Dense Layers (Part E)

- Embedding + Convolutional Layers (Part F)

- Embedding + Recurrent Layers (Part G)

- Embedding + Transformer Encoder blocks (Part H)

- etc.

We will cover all the above methods in this series.
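The architectures listed above share the same skeleton: an Embedding layer, a block that turns the sequence of word vectors into a single vector, and a softmax output with one unit per topic. The Keras sketch below illustrates this for the first three variants. The vocabulary size, sequence length, layer sizes, and pooling choices are assumptions for illustration, not the settings used later in the series; the 32 output units simply match the 32 topics of the dataset mentioned earlier. A Transformer Encoder block would replace the middle part but is longer, so it is omitted here. It assumes a TensorFlow 2.x installation.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

VOCAB_SIZE = 20000   # assumed vocabulary size
SEQ_LEN = 64         # assumed (padded) sequence length in tokens
EMBED_DIM = 64       # assumed embedding size
NUM_CLASSES = 32     # one output unit per topic

def build_model(kind="dense"):
    """Embedding + {Dense | Conv1D | LSTM} + softmax classifier (illustrative sizes)."""
    inputs = tf.keras.Input(shape=(SEQ_LEN,), dtype="int64")
    x = layers.Embedding(VOCAB_SIZE, EMBED_DIM)(inputs)   # (batch, SEQ_LEN, EMBED_DIM)
    if kind == "dense":
        x = layers.GlobalAveragePooling1D()(x)            # average the word vectors
    elif kind == "conv":
        x = layers.Conv1D(64, kernel_size=5, activation="relu")(x)
        x = layers.GlobalMaxPooling1D()(x)                # max over positions per filter
    elif kind == "lstm":
        x = layers.LSTM(64)(x)                            # final hidden state
    else:
        raise ValueError(f"unknown model kind: {kind}")
    x = layers.Dense(64, activation="relu")(x)
    outputs = layers.Dense(NUM_CLASSES, activation="softmax")(x)
    model = tf.keras.Model(inputs, outputs)
    # Integer topic ids as targets -> sparse categorical cross-entropy.
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model

dummy_batch = np.zeros((2, SEQ_LEN), dtype="int64")   # two all-padding "texts"
for kind in ("dense", "conv", "lstm"):
    probs = build_model(kind).predict(dummy_batch, verbose=0)
    print(kind, probs.shape)   # (2, 32) for every variant
```

Only the middle block changes between variants, which is why the series can compare them on the same data pipeline.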

What is Text Preprocessing?

Text preprocessing is traditionally an important step for natural language processing (NLP) tasks. It transforms text into a more suitable form so that Machine Learning or Deep Learning algorithms can perform better.

The main phases of text preprocessing are:

- Noise Removal (cleaning): removing unnecessary characters and formatting

- Tokenization: breaking multi-word strings into smaller components

- Normalization: a catch-all term for further processing that puts text into a standard form; this includes stemming and lemmatization

Some of the common Noise Removal (cleaning) steps are:

- Removal of punctuation

- Removal of Frequent words

- Removal of Rare words

- Removal of emojis

- Removal of emoticons

- Conversion of emoticons to words

- Conversion of emojis to words

- Removal of URLs

- Removal of HTML tags

- Chat words conversion

- Spelling correction
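A few of the cleaning steps above can be sketched with Python's standard `re` and `string` modules. The regular expressions and the tiny `CHAT_WORDS` dictionary below are simplified, hypothetical choices: the URL pattern does not catch every possible URL, and emoji/emoticon handling and spelling correction are omitted. Real pipelines usually rely on more thorough rules.

```python
import re
import string

# Hypothetical chat-word dictionary; a real one would be much larger.
CHAT_WORDS = {"lol": "laughing out loud", "brb": "be right back", "imo": "in my opinion"}

HTML_TAG = re.compile(r"<[^>]+>")                       # simplified HTML tag pattern
URL = re.compile(r"https?://\S+|www\.\S+")              # simplified URL pattern
PUNCT = re.compile(f"[{re.escape(string.punctuation)}]")

def clean_text(text):
    text = HTML_TAG.sub(" ", text)    # removal of HTML tags
    text = URL.sub(" ", text)         # removal of URLs (before punctuation removal)
    text = PUNCT.sub(" ", text)       # removal of punctuation
    # Chat words conversion; joining on single spaces also collapses extra whitespace.
    words = [CHAT_WORDS.get(w.lower(), w) for w in text.split()]
    return " ".join(words)

print(clean_text("<p>Great phone, IMO!!! See https://example.com/review</p>"))
# Great phone in my opinion See
```

The order matters: URLs are removed before punctuation, otherwise stripping `:` and `/` would break the URL into ordinary-looking words.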

Tokenization is about splitting strings of text into smaller pieces, or “tokens”. Paragraphs can be tokenized into sentences and sentences can be tokenized into words.
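Both levels of tokenization described above can be sketched with regular expressions. These rules are deliberately naive assumptions: an abbreviation such as "e.g." or "Dr." would be split incorrectly, which is why libraries typically ship more elaborate tokenizers.

```python
import re

def sentence_tokenize(paragraph):
    """Split after '.', '!' or '?' when followed by whitespace (naive rule)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", paragraph.strip()) if s]

def word_tokenize(sentence):
    """Keep runs of word characters and apostrophes as word tokens."""
    return re.findall(r"[\w']+", sentence)

paragraph = "The delivery was late. The product, however, works fine! Would I buy again?"
sentences = sentence_tokenize(paragraph)
print(sentences)
# ['The delivery was late.', 'The product, however, works fine!', 'Would I buy again?']
print(word_tokenize(sentences[1]))
# ['The', 'product', 'however', 'works', 'fine']
```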

Noise Removal and Tokenization are staples of almost all text pre-processing pipelines. However, some data may require further processing through text normalization. Some of the common normalization steps are:

- Upper or lowercasing

- Stopword removal

- Stemming: bluntly removing prefixes and suffixes from a word

- Lemmatization: replacing a word with its dictionary base form (lemma)
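The normalization steps above are easiest to compare side by side. In this sketch, the stopword list, the suffix list, and the lemma dictionary are tiny hypothetical stand-ins: `crude_stem` is not the Porter stemmer or any library's algorithm, and real lemmatizers rely on a full dictionary and usually on part-of-speech information.

```python
# Hypothetical, tiny resources for illustration only.
STOPWORDS = {"the", "a", "an", "is", "are", "were", "of", "and", "to"}
SUFFIXES = ("ing", "ies", "ed", "es", "s")     # crude suffix list, not a real stemmer
LEMMAS = {"studies": "study", "were": "be", "mice": "mouse"}

def lowercase(tokens):
    return [t.lower() for t in tokens]

def remove_stopwords(tokens):
    return [t for t in tokens if t not in STOPWORDS]

def crude_stem(token):
    """Chop the first matching suffix, whether or not the result is a real word."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) > len(suffix) + 2:
            return token[: -len(suffix)]
    return token

def lemmatize(token):
    """Dictionary lookup: map a known word form to its lemma."""
    return LEMMAS.get(token, token)

tokens = lowercase(["The", "studies", "were", "testing", "mice"])
print(remove_stopwords(tokens))          # ['studies', 'testing', 'mice']
print([crude_stem(t) for t in tokens])   # ['the', 'stud', 'were', 'test', 'mice']
print([lemmatize(t) for t in tokens])    # ['the', 'study', 'be', 'testing', 'mouse']
```

The stemmer turns "studies" into the non-word "stud" and cannot handle the irregular "mice", while the dictionary-based lemmatizer returns real base forms but only for words it knows.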

In this tutorial, we will use the tf.keras.layers.TextVectorization layer, which is one of the Keras preprocessing layers and can standardize, tokenize, and vectorize text in a single step.
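As a minimal sketch of how this layer combines several of the steps above (standardization, whitespace tokenization, and mapping tokens to integer ids), the snippet below adapts a `TextVectorization` layer to a made-up toy corpus. The corpus and the `max_tokens` / `output_sequence_length` values are illustrative assumptions; it assumes TensorFlow 2.6 or later, where the layer is available as `tf.keras.layers.TextVectorization`.

```python
import numpy as np
import tensorflow as tf

# Made-up toy corpus (assumption).
texts = [
    "The match ended in a draw!",
    "The election results were announced.",
    "The team won the match.",
]

# By default the layer lowercases, strips punctuation, and splits on whitespace.
vectorizer = tf.keras.layers.TextVectorization(
    max_tokens=1000,            # upper bound on the vocabulary size
    output_mode="int",          # map each token to an integer index
    output_sequence_length=8,   # pad / truncate every text to 8 tokens
)
# Build the vocabulary from a batched tf.data.Dataset of strings.
vectorizer.adapt(tf.data.Dataset.from_tensor_slices(texts).batch(2))

vocab = vectorizer.get_vocabulary()
print(vocab[:3])    # ['', '[UNK]', 'the'] (padding, out-of-vocabulary, most frequent)

encoded = vectorizer(tf.constant(["The team lost the match"]))
ids = np.asarray(encoded)[0]
print(encoded.shape)  # (1, 8)
print(ids)            # "lost" was never seen, so it maps to index 1
```

Unseen words are mapped to the `[UNK]` index 1, and shorter texts are padded with 0 up to the requested length, so every review ends up as a fixed-length integer vector that an Embedding layer can consume.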

Summary

In this part,

- we have introduced the Text Classification with Deep Learning tutorial series,

- we have covered the fundamentals of text classification and related concepts in the Deep Learning approach.

In the next part, we will explore the sample dataset.

Do you have any questions or comments? Please share them in the comment section.

Thank you for your attention!