Part F: Text Classification with a Convolutional (Conv1D) Layer in a Feed-Forward Network

Author: Murat Karakaya

Date created….. 17 09 2021

Date published… 11 03 2022

Last modified…. 29 12 2022

Description: This is Part F of the tutorial series “Multi-Topic Text Classification with Various Deep Learning Models”, which covers all the phases of multi-class text classification:

- Exploratory Data Analysis (EDA),

- Text preprocessing

- TF Data Pipeline

- Keras TextVectorization preprocessing layer

- Multi-class (multi-topic) text classification

- Deep Learning model design & end-to-end model implementation

- Performance evaluation & metrics

- Generating classification report

- Hyper-parameter tuning

- etc.

We will design various Deep Learning models using:

- Keras Embedding layer,

- Convolutional (Conv1D) layer,

- Recurrent (LSTM) layer,

- Transformer Encoder block, and

- Pre-trained transformer (BERT).

We will cover all the topics related to solving Multi-Class Text Classification problems with sample implementations in Python / TensorFlow / Keras environment.

We will use a Kaggle dataset that contains 32 topics and more than 400K reviews.

If you would like to learn more about Deep Learning with practical coding examples,

- Please subscribe to the Murat Karakaya Akademi YouTube Channel or

- Follow my blog on muratkarakaya.net

- Do not forget to turn on notifications so that you will be notified when new parts are uploaded.

You can access all the code, videos, and posts of this tutorial series from the links below.


This tutorial series consists of several parts covering Text Classification with various Deep Learning models. You can access all the parts from this index page.

Photo by Josh Eckstein on Unsplash

PART F: TEXT CLASSIFICATION WITH A CONVOLUTIONAL (CONV1D) LAYER

In this part, we will use a Keras Conv1D layer in a feed-forward network (FFN).

If you are not familiar with the Keras Conv1D layer or the Convolution concept, you can check the following Murat Karakaya Akademi YouTube playlists and videos:

English:

- tf.keras.layers: Understand & Use

- Convolution in Deep Learning: Explained & Implemented with Python Part A Part B Part C

- Conv1D: Understanding tf.keras.layers

- Conv1D: Keras 1D Convolution Model For Regression (Boston House Prices Prediction)

Turkish:

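- tf.keras.layers: Anla ve Kullan (Understand & Use)

- Derin Öğrenmede Görüntü İşleme: Matriks Çarpımından Keras Conv2D Katmanına kadar Python ile Kodlama (Image Processing in Deep Learning: Coding in Python from Matrix Multiplication to the Keras Conv2D Layer)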

If you are not familiar with classification in Deep Learning, you can find a 5-part tutorial in the following Murat Karakaya Akademi YouTube playlists:

- How to solve Classification Problems in Deep Learning with Tensorflow & Keras

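- Derin Öğrenmede Sınıflandırma Çeşitleri Nelerdir? Keras ve TensorFlow ile Nasıl Yapılmalı? (in Turkish: “What Are the Types of Classification in Deep Learning? How Should It Be Done with Keras and TensorFlow?”)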

Let’s build a model with a Conv1D layer

Let me briefly recap the important parameters of the Keras Conv1D layer:

```python
tf.keras.layers.Conv1D(
    filters,
    kernel_size,
    padding="valid",
    activation=None,
)
```

- filters: Integer, the dimensionality of the output space (i.e. the number of output filters in the convolution).

- kernel_size: An integer or tuple/list of a single integer, specifying the length of the 1D convolution window.

- padding: One of “valid”, “same”, or “causal” (case-insensitive).
  - “valid” means no padding.
  - “same” pads the input with zeros evenly on the left and right so that, with strides=1, the output has the same length (number of steps) as the input.
  - “causal” results in causal (dilated) convolutions, i.e. output[t] does not depend on input[t+1:]. This is useful when modeling temporal data where the model should not violate the temporal order.

- activation: Activation function to use. If you don’t specify anything, no activation is applied (see keras.activations).
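To make these parameters more concrete, here is a minimal NumPy sketch of what a Conv1D layer computes for a single sequence. It is not Keras code: it assumes strides=1 and dilation_rate=1, uses the hypothetical helper name conv1d_forward, and follows the Keras kernel layout (kernel_size, in_channels, filters). For "same" it splits the padding the way TensorFlow does for strides=1, putting the extra zero on the right when the total is odd.

```python
import numpy as np


def conv1d_forward(x, kernel, bias, padding="valid", activation=None):
    """Naive Conv1D forward pass for one sequence (strides=1, dilation_rate=1).

    x:      (steps, in_channels)
    kernel: (kernel_size, in_channels, filters)
    bias:   (filters,)
    Returns an array of shape (out_steps, filters).
    """
    k = kernel.shape[0]
    if padding == "same":
        left = (k - 1) // 2
        x = np.pad(x, ((left, k - 1 - left), (0, 0)))  # zeros on both sides
    elif padding == "causal":
        x = np.pad(x, ((k - 1, 0), (0, 0)))  # zeros only on the left
    elif padding != "valid":
        raise ValueError(f"unknown padding: {padding}")
    out_steps = x.shape[0] - k + 1
    out = np.empty((out_steps, kernel.shape[2]))
    for t in range(out_steps):
        window = x[t:t + k]  # (kernel_size, in_channels)
        # Sum over window positions and input channels, separately for each filter
        out[t] = np.tensordot(window, kernel, axes=([0, 1], [0, 1])) + bias
    if activation == "relu":
        out = np.maximum(out, 0.0)
    return out


rng = np.random.default_rng(0)
x = rng.normal(size=(40, 16))        # 40 tokens with 16-dim embeddings
w = rng.normal(size=(7, 16, 256))    # kernel_size=7, 256 filters
b = np.zeros(256)
print(conv1d_forward(x, w, b, "same", "relu").shape)  # (40, 256)
print(conv1d_forward(x, w, b, "valid").shape)         # (34, 256)
```

Changing filters only changes the last dimension of the output, while kernel_size and padding decide how many input positions each output step sees and how many steps come out.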

In our model, I will use 3 Conv1D layers and set the following values for their parameters:

- filters: 256 filters in the first Conv1D layer, and 128 filters in the second and the last Conv1D layers.

- kernel_size: The first Conv1D layer looks at 7 consecutive inputs (tokens) at a time, the second one at 5, and the last one at 3.

- padding: I will use the “same” value so that, after each convolution, the output sequence has the same length (40 tokens) as the input.

- activation: I will set the activation parameter to ‘relu’, since ReLU is the usual choice for intermediate layers.

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

# max_len (40), vocab_size and number_of_categories (32) come from the
# data-preparation steps in Part D.
embed_dim = 16  # Embedding size for each token
feed_forward_dim = 64  # Hidden layer size in feed forward network


def create_model_Conv1D():
    inputs_tokens = layers.Input(shape=(max_len,), dtype=tf.int32)
    embedding_layer = layers.Embedding(input_dim=vocab_size,
                                       output_dim=embed_dim,
                                       input_length=max_len)
    x = embedding_layer(inputs_tokens)
    x = layers.Conv1D(filters=256, kernel_size=7,
                      padding='same', activation='relu')(x)
    # Optional (disabled): pooling / dropout between the convolutions
    #x = layers.MaxPooling1D(pool_size=2)(x)
    #x = layers.Dropout(0.5)(x)
    x = layers.Conv1D(filters=128, kernel_size=5,
                      padding='same', activation='relu')(x)
    x = layers.Conv1D(filters=128, kernel_size=3,
                      padding='same', activation='relu')(x)
    x = layers.MaxPooling1D(pool_size=2)(x)
    #x = layers.Dropout(0.5)(x)
    x = layers.Flatten()(x)
    x = layers.Dense(feed_forward_dim, activation='sigmoid')(x)
    x = layers.Dropout(0.5)(x)
    # No softmax here: the model outputs logits (hence from_logits=True below)
    outputs = layers.Dense(number_of_categories)(x)
    model = keras.Model(inputs=inputs_tokens,
                        outputs=outputs, name='model_Conv1D')
    loss_fn = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)
    metric_fn = tf.keras.metrics.SparseCategoricalAccuracy()
    model.compile(optimizer="adam", loss=loss_fn, metrics=[metric_fn])
    return model
```

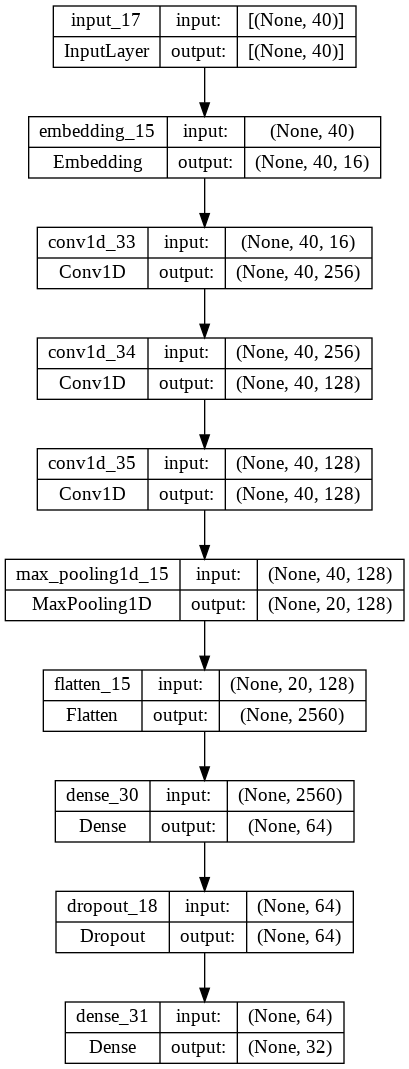

```python
model_Conv1D = create_model_Conv1D()
model_Conv1D.summary()
```

```
Model: "model_Conv1D"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 input_17 (InputLayer)       [(None, 40)]              0
 embedding_15 (Embedding)    (None, 40, 16)            1600000
 conv1d_33 (Conv1D)          (None, 40, 256)           28928
 conv1d_34 (Conv1D)          (None, 40, 128)           163968
 conv1d_35 (Conv1D)          (None, 40, 128)           49280
 max_pooling1d_15 (MaxPoolin  (None, 20, 128)          0
 g1D)
 flatten_15 (Flatten)        (None, 2560)              0
 dense_30 (Dense)            (None, 64)                163904
 dropout_18 (Dropout)        (None, 64)                0
 dense_31 (Dense)            (None, 32)                2080
=================================================================
Total params: 2,008,160
Trainable params: 2,008,160
Non-trainable params: 0
_________________________________________________________________
```

Remember that in Part D, the input text (review) is encoded into 40 tokens (integers). Therefore, in the above summary, you see this first line:

```
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 input_17 (InputLayer)       [(None, 40)]              0
```

Here None refers to the batch size.

After getting the input, we project it into a new representation using the Embedding layer with dimension 16, as seen below:

```
 embedding_15 (Embedding)    (None, 40, 16)            1600000
```

Thus, the output shape of the Embedding layer is (BatchSize=Any, input_size=40, embedding_dimension=16). Basically, we convert 40 integers (tokens) into a new representation in which each token is represented by 16 floating-point numbers. The layer's 1,600,000 parameters form a lookup table with one 16-dimensional vector per vocabulary entry (1,600,000 / 16 = 100,000 entries). Note that during training, the model learns better values for these vectors through backpropagation.
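Under the hood, the embedding step is just row indexing into a trainable table. A minimal NumPy sketch, assuming the sizes implied by the summary (100,000 × 16 table, 40 tokens per review); the token ids are random placeholders, not real encoded reviews, and the uniform random start values are only illustrative.

```python
import numpy as np

# Sizes implied by the model summary: 1,600,000 = 100,000 x 16
n_tokens, dim, seq_len = 100_000, 16, 40

rng = np.random.default_rng(0)
# The Embedding layer's weights: one trainable 16-dim row per token id
embedding_table = rng.uniform(-0.05, 0.05, size=(n_tokens, dim)).astype(np.float32)

# A hypothetical batch of 2 encoded reviews, each with 40 token ids
token_ids = rng.integers(0, n_tokens, size=(2, seq_len))

# The lookup is plain row indexing: (batch, 40) -> (batch, 40, 16)
embedded = embedding_table[token_ids]
print(embedded.shape)        # (2, 40, 16)
print(embedding_table.size)  # 1600000
```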

With the new representation, we can now apply a one-dimensional convolution. The output of the first Conv1D layer is:

```
 conv1d_33 (Conv1D)          (None, 40, 256)           28928
```

If you check the model, the strides parameter is not provided, so its default value of 1 is used. The padding parameter is set to “same”. Because of these two values, the output shape of the Conv1D layer is (BatchSize=Any, input_size=40, convolved_value (number_of_filters)=256): the sequence length stays at 40, and the last dimension always equals the number of filters. If you choose other values for strides or padding, you will end up with a different sequence length (the middle dimension), not a different number of convolved values per step. Also, note that the kernel_size is 7.
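The effect of strides and padding can be checked with the usual output-length arithmetic. The plain-Python helper below (the name conv1d_output_length is hypothetical) follows, as far as I know, the same formula Keras uses for the sequence length; note that the filter count never appears in it.

```python
import math


def conv1d_output_length(input_length, kernel_size, padding="valid",
                         strides=1, dilation_rate=1):
    """Number of output steps of a 1D convolution."""
    # A dilated kernel covers more input positions than kernel_size
    effective_kernel = kernel_size + (kernel_size - 1) * (dilation_rate - 1)
    if padding in ("same", "causal"):
        # Zero padding keeps every start position; strides then subsample them
        return math.ceil(input_length / strides)
    if padding == "valid":
        # Only windows that fit entirely inside the input are used
        return math.ceil((input_length - effective_kernel + 1) / strides)
    raise ValueError(f"unknown padding: {padding}")


print(conv1d_output_length(40, 7, "same"))             # 40, as in the summary
print(conv1d_output_length(40, 7, "valid"))            # 34
print(conv1d_output_length(40, 7, "same", strides=2))  # 20
```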

With the first Conv1D layer, we convert the representation of each token from 16 floating-point numbers into a new representation of 256 floating-point numbers. During training, the model learns the weights of these 256 filters through backpropagation. Each filter spans kernel_size=7 consecutive tokens × 16 embedding values, so the layer has 7 × 16 × 256 weights plus 256 biases, that is, 28,928 parameters, as reported in the summary.
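All parameter counts in the summary follow from the same kind of arithmetic. A short sketch with hypothetical helper names; the vocabulary size of 100,000 is inferred from the Embedding layer's 1,600,000 parameters rather than taken from Part D.

```python
def conv1d_params(kernel_size, in_channels, filters):
    """Trainable parameters of a Conv1D layer with a bias (the default)."""
    # One weight per (window position, input channel, filter) + one bias per filter
    return kernel_size * in_channels * filters + filters


def dense_params(in_features, units):
    """Trainable parameters of a Dense layer with a bias."""
    return in_features * units + units


conv_counts = [conv1d_params(7, 16, 256),
               conv1d_params(5, 256, 128),
               conv1d_params(3, 128, 128)]
total = (100_000 * 16                      # Embedding table
         + sum(conv_counts)
         + dense_params(20 * 128, 64)      # after MaxPooling1D + Flatten
         + dense_params(64, 32))           # 32 output classes
print(conv_counts)  # [28928, 163968, 49280]
print(total)        # 2008160
```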

The next layer is another Conv1D layer with the output shape (BatchSize=Any, input_size=40, convolved_value (number_of_filters)=128). This time, the kernel_size is 5.

The last Conv1D layer also has the output shape (BatchSize=Any, input_size=40, convolved_value (number_of_filters)=128), with a kernel_size of 3.

As a side note: here, I propose a sample model with Conv1D layers, but you can try other architectures. For example, you can add Dropout layers between the Conv1D layers if you think that your model begins to memorize (overfit) the data. Or you can decrease the number of Conv1D layers in the model for the same reason.

The kernel_size and the number of filters are usually chosen based on experience. As a rule of thumb, you can prefer bigger numbers for the first layer and smaller numbers for the following layers, as in the above model. Essentially, you need to experiment and observe how your model behaves with your data.

To reduce the output size, you can use a MaxPooling1D layer after the Conv1D layers, followed by a Flatten layer that turns the result into a vector for the Dense layers.

```
 conv1d_35 (Conv1D)          (None, 40, 128)           49280
 max_pooling1d_15 (MaxPoolin  (None, 20, 128)          0
 g1D)
 flatten_15 (Flatten)        (None, 2560)              0
```

Since the pool_size is set to 2 for the MaxPooling1D layer, the number of steps (tokens) is halved (from 40 to 20).

The Flatten layer simply converts the (None, 20, 128) input into a flat (None, 20 × 128 = 2560) output.
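A minimal NumPy sketch of what MaxPooling1D(pool_size=2) and Flatten do to the shapes, assuming the Keras defaults of strides equal to pool_size and “valid” padding. max_pool_1d is a hypothetical helper, not a Keras function, and the random array only stands in for the last Conv1D output.

```python
import numpy as np


def max_pool_1d(x, pool_size=2):
    """Max pooling over steps of a (batch, steps, channels) array.

    Non-overlapping windows (strides=pool_size), 'valid' padding.
    """
    batch, steps, channels = x.shape
    usable = (steps // pool_size) * pool_size  # 'valid' drops a leftover tail
    windows = x[:, :usable].reshape(batch, usable // pool_size, pool_size, channels)
    return windows.max(axis=2)


conv_out = np.random.default_rng(0).normal(size=(4, 40, 128))  # stand-in for conv1d_35
pooled = max_pool_1d(conv_out)             # (4, 20, 128)
flat = pooled.reshape(pooled.shape[0], -1)  # Flatten: (4, 20 * 128) = (4, 2560)
print(pooled.shape, flat.shape)
```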

After that, you can apply Dense layers for classification. You can also use plot_model to draw a chart of how the data is transformed from one representation (shape) to another by the different layers.

```python
tf.keras.utils.plot_model(model_Conv1D, show_shapes=True)
```

Train

As you know, in Part D, we prepared the train, validation, and test datasets so that they are ready to be fed into any ML/DL model. Now, we will use the train and validation datasets to train the model.

```python
history = model_Conv1D.fit(train_ds, validation_data=val_ds, verbose=1, epochs=25)
```

```
Epoch 1/25
237/237 [==============================] - 2s 6ms/step - loss: 3.4778 - sparse_categorical_accuracy: 0.0290 - val_loss: 3.4652 - val_sparse_categorical_accuracy: 0.0325
Epoch 2/25
237/237 [==============================] - 1s 5ms/step - loss: 3.4645 - sparse_categorical_accuracy: 0.0322 - val_loss: 3.4015 - val_sparse_categorical_accuracy: 0.0529
Epoch 3/25
237/237 [==============================] - 1s 5ms/step - loss: 3.1921 - sparse_categorical_accuracy: 0.0775 - val_loss: 2.9360 - val_sparse_categorical_accuracy: 0.1298
Epoch 4/25
237/237 [==============================] - 1s 5ms/step - loss: 2.7010 - sparse_categorical_accuracy: 0.1735 - val_loss: 2.4498 - val_sparse_categorical_accuracy: 0.2734
Epoch 5/25
237/237 [==============================] - 1s 5ms/step - loss: 2.2243 - sparse_categorical_accuracy: 0.2993 - val_loss: 2.0956 - val_sparse_categorical_accuracy: 0.3846
Epoch 6/25
237/237 [==============================] - 1s 5ms/step - loss: 1.8445 - sparse_categorical_accuracy: 0.4162 - val_loss: 1.8334 - val_sparse_categorical_accuracy: 0.4808
Epoch 7/25
237/237 [==============================] - 1s 5ms/step - loss: 1.5595 - sparse_categorical_accuracy: 0.5178 - val_loss: 1.6416 - val_sparse_categorical_accuracy: 0.5727
Epoch 8/25
237/237 [==============================] - 1s 5ms/step - loss: 1.3285 - sparse_categorical_accuracy: 0.6030 - val_loss: 1.4775 - val_sparse_categorical_accuracy: 0.6304
Epoch 9/25
237/237 [==============================] - 1s 5ms/step - loss: 1.1308 - sparse_categorical_accuracy: 0.6627 - val_loss: 1.3818 - val_sparse_categorical_accuracy: 0.6496
Epoch 10/25
237/237 [==============================] - 1s 5ms/step - loss: 0.9351 - sparse_categorical_accuracy: 0.7209 - val_loss: 1.2434 - val_sparse_categorical_accuracy: 0.7109
Epoch 11/25
237/237 [==============================] - 1s 5ms/step - loss: 0.7344 - sparse_categorical_accuracy: 0.7886 - val_loss: 1.1474 - val_sparse_categorical_accuracy: 0.7518
Epoch 12/25
237/237 [==============================] - 1s 5ms/step - loss: 0.5596 - sparse_categorical_accuracy: 0.8510 - val_loss: 1.0674 - val_sparse_categorical_accuracy: 0.7812
Epoch 13/25
237/237 [==============================] - 1s 5ms/step - loss: 0.4449 - sparse_categorical_accuracy: 0.8862 - val_loss: 1.0881 - val_sparse_categorical_accuracy: 0.7849
Epoch 14/25
237/237 [==============================] - 1s 5ms/step - loss: 0.3573 - sparse_categorical_accuracy: 0.9133 - val_loss: 0.9897 - val_sparse_categorical_accuracy: 0.8047
Epoch 15/25
237/237 [==============================] - 1s 5ms/step - loss: 0.2901 - sparse_categorical_accuracy: 0.9347 - val_loss: 0.9854 - val_sparse_categorical_accuracy: 0.8101
Epoch 16/25
237/237 [==============================] - 1s 5ms/step - loss: 0.2563 - sparse_categorical_accuracy: 0.9446 - val_loss: 0.9927 - val_sparse_categorical_accuracy: 0.8173
Epoch 17/25
237/237 [==============================] - 1s 5ms/step - loss: 0.2221 - sparse_categorical_accuracy: 0.9501 - val_loss: 1.0378 - val_sparse_categorical_accuracy: 0.8137
Epoch 18/25
237/237 [==============================] - 1s 5ms/step - loss: 0.1974 - sparse_categorical_accuracy: 0.9566 - val_loss: 1.0300 - val_sparse_categorical_accuracy: 0.8155
Epoch 19/25
237/237 [==============================] - 1s 5ms/step - loss: 0.1829 - sparse_categorical_accuracy: 0.9604 - val_loss: 1.0824 - val_sparse_categorical_accuracy: 0.8119
Epoch 20/25
237/237 [==============================] - 1s 5ms/step - loss: 0.1643 - sparse_categorical_accuracy: 0.9616 - val_loss: 1.0970 - val_sparse_categorical_accuracy: 0.8191
Epoch 21/25
237/237 [==============================] - 1s 5ms/step - loss: 0.1464 - sparse_categorical_accuracy: 0.9682 - val_loss: 1.1232 - val_sparse_categorical_accuracy: 0.8233
Epoch 22/25
237/237 [==============================] - 1s 5ms/step - loss: 0.1346 - sparse_categorical_accuracy: 0.9703 - val_loss: 1.0852 - val_sparse_categorical_accuracy: 0.8311
Epoch 23/25
237/237 [==============================] - 1s 5ms/step - loss: 0.1339 - sparse_categorical_accuracy: 0.9712 - val_loss: 1.1065 - val_sparse_categorical_accuracy: 0.8209
Epoch 24/25
237/237 [==============================] - 1s 5ms/step - loss: 0.1257 - sparse_categorical_accuracy: 0.9738 - val_loss: 1.1412 - val_sparse_categorical_accuracy: 0.8185
Epoch 25/25
237/237 [==============================] - 1s 5ms/step - loss: 0.1130 - sparse_categorical_accuracy: 0.9735 - val_loss: 1.1779 - val_sparse_categorical_accuracy: 0.8173
```

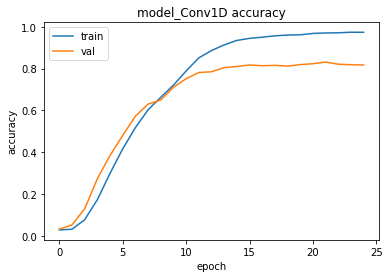

Let’s observe the accuracy and the loss values during the training:

```python
import matplotlib.pyplot as plt

plt.plot(history.history['sparse_categorical_accuracy'])
plt.plot(history.history['val_sparse_categorical_accuracy'])
plt.title('model_Conv1D accuracy')
plt.ylabel('accuracy')
plt.xlabel('epoch')
plt.legend(['train', 'val'], loc='upper left')
plt.show()
```

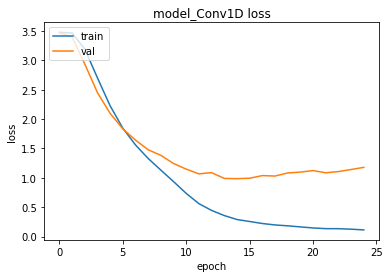

```python
plt.plot(history.history['loss'])
plt.plot(history.history['val_loss'])
plt.title('model_Conv1D loss')
plt.ylabel('loss')
plt.xlabel('epoch')
plt.legend(['train', 'val'], loc='upper left')
plt.show()
```

You can observe the underfitting and overfitting behaviors of the model in these curves and decide how to handle them by tuning the hyperparameters. For example, the training loss keeps decreasing until the last epoch (0.1130), whereas the validation loss reaches its minimum (0.9854) at epoch 15 and then rises to 1.1779 by epoch 25, a typical sign of overfitting.
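To read the curves numerically rather than by eye, a small helper (hypothetical, not part of Keras) can pick the epoch with the best validation value from a history dictionary such as history.history. The val_loss numbers below are copied from the training log above.

```python
def best_epoch(history_dict, key="val_loss", mode="min"):
    """Return (1-based epoch, value) with the best value of `key`."""
    values = history_dict[key]
    pick = min if mode == "min" else max
    best_value = pick(values)
    return values.index(best_value) + 1, best_value


# val_loss per epoch, copied from the training log
val_loss = [3.4652, 3.4015, 2.9360, 2.4498, 2.0956, 1.8334, 1.6416, 1.4775,
            1.3818, 1.2434, 1.1474, 1.0674, 1.0881, 0.9897, 0.9854, 0.9927,
            1.0378, 1.0300, 1.0824, 1.0970, 1.1232, 1.0852, 1.1065, 1.1412,
            1.1779]
print(best_epoch({"val_loss": val_loss}))  # (15, 0.9854)
```

In the notebook, best_epoch(history.history) should point at the same epoch, and best_epoch(history.history, 'val_sparse_categorical_accuracy', 'max') at the epoch with the highest validation accuracy.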

In this tutorial, I will not go into the details of hyperparameter tuning, underfitting, and overfitting. However, in PART K: HYPERPARAMETER OPTIMIZATION (TUNING), UNDERFITTING, AND OVERFITTING, we will see these concepts in detail.

Save the trained model

```python
tf.keras.models.save_model(model_Conv1D, 'MultiClassTextClassification_Conv1D')
```

```
WARNING:absl:Found untraced functions such as _jit_compiled_convolution_op, _jit_compiled_convolution_op, _jit_compiled_convolution_op while saving (showing 3 of 3). These functions will not be directly callable after loading.
```

Test

```python
loss, accuracy = model_Conv1D.evaluate(test_ds)
print("Test accuracy: ", accuracy)
```

```
1319/1319 [==============================] - 3s 3ms/step - loss: 1.1513 - sparse_categorical_accuracy: 0.8191
Test accuracy:  0.819110095500946
```

Predictions

We can use the trained model's predict() method to predict the class of the given reviews as follows:

```python
preds = model_Conv1D.predict(test_ds)
# The model outputs logits; the index of the largest one is the predicted class
preds = preds.argmax(axis=1)
```

```
1319/1319 [==============================] - 3s 2ms/step
```
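Since the model's last Dense layer has no activation, predict() returns logits, and taking argmax directly on them is enough. The sketch below, with made-up logits for two hypothetical reviews and three classes, shows why: softmax is monotonic within each row, so it never changes which class has the largest score.

```python
import numpy as np


def softmax(logits):
    # Subtracting the row max avoids overflow and does not change the result
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


logits = np.array([[2.0, -1.0, 0.5],
                   [-0.3, 0.1, 4.0]])  # hypothetical model outputs
probs = softmax(logits)
print(logits.argmax(axis=1), probs.argmax(axis=1))  # [0 2] [0 2]
```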

We can also get the actual (true) class of the given reviews as follows:

```python
actuals = test_ds.unbatch().map(lambda x, y: y)
actuals = list(actuals.as_numpy_iterator())
```

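By comparing preds with actuals, we can measure the model performance as shown below. Note that this comparison is only valid if test_ds yields the samples in the same order on every pass (for example, if it is not reshuffled), because predict() and the code above iterate over the dataset separately.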

Classification Report

Since we are dealing with multi-class text classification, it is a good idea to generate a classification report to observe the performance of the model for each class. We can use the scikit-learn classification_report() function to build a text report showing the main classification metrics.

The report summarizes the precision, recall, and F1 score of each class.

The reported averages include:

- macro average (averaging the unweighted mean per label),

- weighted average (averaging the support-weighted mean per label),

- sample average (only for multilabel classification),

- micro average (averaging the total true positives, false negatives, and false positives) is only shown for multi-label or multi-class with a subset of classes because it corresponds to accuracy otherwise and would be the same for all metrics.
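To see where the numbers in such a report come from, here is a minimal NumPy sketch that derives per-class precision, recall, and F1, plus the macro and weighted averages, from a confusion matrix. The helper name and the 3-class matrix are hypothetical; in the notebook you would rely on scikit-learn.

```python
import numpy as np


def report_from_confusion(cm):
    """Per-class precision/recall/F1 plus macro and weighted averages.

    cm[i, j] counts samples of actual class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)            # correct predictions per class
    support = cm.sum(axis=1)    # actual samples per class (row sums)
    predicted = cm.sum(axis=0)  # predictions per class (column sums)
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, predicted, out=zeros.copy(), where=predicted > 0)
    recall = np.divide(tp, support, out=zeros.copy(), where=support > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    # Macro: plain mean over classes; weighted: mean weighted by support
    macro = {"precision": precision.mean(), "recall": recall.mean(), "f1": f1.mean()}
    w = support / support.sum()
    weighted = {"precision": (precision * w).sum(), "recall": (recall * w).sum(),
                "f1": (f1 * w).sum()}
    accuracy = tp.sum() / cm.sum()
    return precision, recall, f1, macro, weighted, accuracy


toy_cm = [[5, 1, 0],
          [2, 6, 2],
          [0, 1, 3]]  # hypothetical 3-class confusion matrix
p, r, f1, macro, weighted, acc = report_from_confusion(toy_cm)
print(round(acc, 4), round(weighted["recall"], 4))  # 0.7 0.7
```

Because each class's recall is weighted by its support, the weighted-average recall always equals the accuracy, which is why both read 0.8191 in the report that follows.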

```python
from sklearn import metrics

print(metrics.classification_report(actuals, preds, digits=4))
```

```
              precision    recall  f1-score   support

           0     0.6443    0.7909    0.7101      2707
           1     0.9437    0.8596    0.8997      2458
           2     0.7554    0.7372    0.7462      2732
           3     0.8599    0.7742    0.8148      2560
           4     0.8141    0.8829    0.8471      2768
           5     0.9764    0.8851    0.9285      2619
           6     0.8535    0.8106    0.8315      2724
           7     0.4385    0.6983    0.5387      2380
           8     0.9545    0.9198    0.9368      2757
           9     0.7872    0.8174    0.8020      2235
          10     0.9562    0.9262    0.9410      2736
          11     0.8360    0.6517    0.7324      2604
          12     0.8562    0.8284    0.8421      2687
          13     0.6075    0.8149    0.6961      2264
          14     0.9501    0.8871    0.9175      2683
          15     0.4797    0.6404    0.5486      2681
          16     0.9802    0.8780    0.9263      2770
          17     0.9763    0.9692    0.9727      2725
          18     0.8615    0.7594    0.8072      2506
          19     0.6838    0.8376    0.7529      2716
          20     0.9137    0.8895    0.9015      2751
          21     0.9412    0.6968    0.8008      2734
          22     0.8395    0.8729    0.8559      2660
          23     0.9447    0.8569    0.8986      2571
          24     0.7855    0.8451    0.8142      2751
          25     0.7534    0.6649    0.7064      2647
          26     0.8792    0.8351    0.8566      2693
          27     0.9080    0.9602    0.9334      2662
          28     0.9553    0.8024    0.8722      2662
          29     0.8688    0.8561    0.8624      2592
          30     0.8094    0.6423    0.7162      2625
          31     0.9092    0.8864    0.8977      2756

    accuracy                         0.8191     84416
   macro avg     0.8351    0.8181    0.8221     84416
weighted avg     0.8376    0.8191    0.8241     84416
```

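In multi-class classification, you need to pay attention to the number of samples in each class (the support column in the table above). If the classes are imbalanced, you may need to take action, for example by resampling the data or weighting the classes. In this test set, the support values range from 2,235 (class 9) to 2,770 (class 16), so the classes are roughly balanced.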
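A quick way to quantify imbalance is to divide the largest support by the smallest one. The sketch below does that with the supports copied from the report above, and also shows one common remedy: inverse-frequency (“balanced”) class weights computed as n_samples / (n_classes × count), the same heuristic scikit-learn uses for class_weight='balanced'. Both helper names are hypothetical, and in practice the weights should be computed from the training labels, not from the test supports used here for illustration.

```python
def imbalance_ratio(counts):
    """Largest class count divided by the smallest (1.0 means perfectly balanced)."""
    return max(counts) / min(counts)


def balanced_class_weights(counts):
    """Inverse-frequency weights: n_samples / (n_classes * count) per class id."""
    total, n_classes = sum(counts), len(counts)
    return {c: total / (n_classes * count) for c, count in enumerate(counts)}


# Test-set supports of classes 0..31, copied from the classification report
support = [2707, 2458, 2732, 2560, 2768, 2619, 2724, 2380,
           2757, 2235, 2736, 2604, 2687, 2264, 2683, 2681,
           2770, 2725, 2506, 2716, 2751, 2734, 2660, 2571,
           2751, 2647, 2693, 2662, 2662, 2592, 2625, 2756]

print(round(imbalance_ratio(support), 3))  # 1.239
weights = balanced_class_weights(support)
print(round(min(weights.values()), 2), round(max(weights.values()), 2))  # 0.95 1.18
```

Keras' fit() accepts such a dictionary through its class_weight argument; with weights staying between roughly 0.95 and 1.18, their effect on this dataset would be small.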

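Moreover, observe the precision, recall, and F1 scores of each class and compare them with the average values of these metrics. For instance, classes 7 and 15 have the lowest F1 scores (0.5387 and 0.5486), well below the macro average of 0.8221.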

If you would like to learn more about these metrics and how to handle imbalanced datasets, please refer to the following tutorials on the Murat Karakaya Akademi YouTube channel :)

In English:

- Multi-Class Text Classification with GPT3 Transformer: Preprocessing Metrics Cross Validation

- Model Evaluation & Performance Metrics Tutorial: Explained with Examples, Pros & Cons

- How To Evaluate Classifiers with Imbalanced Dataset Part A Fundamentals, Metrics, Synthetic Dataset

In Turkish:

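- Dengesiz Veri Kümeleri ile Sınıflandırmada Başarım Ölçütleri: 3. Bölüm ROC, AUC, Threshold Ayarlama (Performance Metrics for Classification with Imbalanced Datasets, Part 3: ROC, AUC, Threshold Tuning)

- Dengesiz Veri Kümeleri ile Sınıflandırmada Başarım Ölçütleri: 2. Bölüm Sınıflandırıcıların Başarımı (Part 2: Performance of Classifiers)

- Dengesiz Veri Kümeleri ile Sınıflandırmada Başarım Ölçütleri: 1. Bölüm Temel Bilgiler, Metrikler (Part 1: Fundamentals, Metrics)

- Çok-Etiketli Sınıflandırmada Başarım Ölçümü: ROC & AUC ölçütleri 2/3 (Performance Measurement in Multi-Label Classification: ROC & AUC Metrics 2/3)

- Çok-Etiketli Sınıflandırmada Başarım Ölçümü: Precision Recall F1 ölçütleri 1/3 (Performance Measurement in Multi-Label Classification: Precision, Recall, F1 Metrics 1/3)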

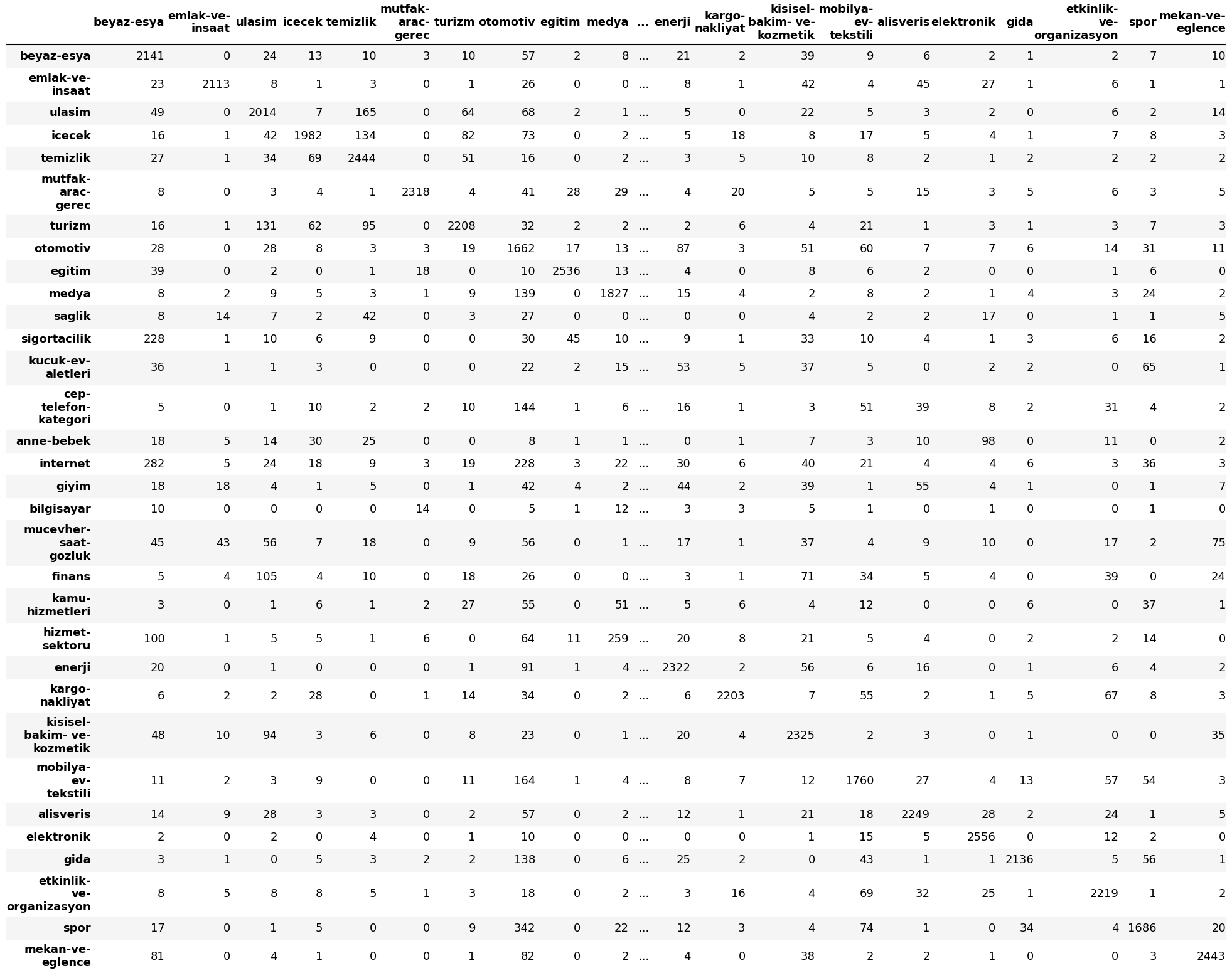

Confusion Matrix

A confusion matrix shows the performance of a classification model by comparing the actual and the predicted classes in matrix form. In scikit-learn's confusion_matrix, each row corresponds to an actual class and each column to a predicted class, so the numbers on the diagonal are the numbers of correct predictions.
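The layout described above can be sketched in a few lines of NumPy: each (actual, predicted) pair increments one cell, and the diagonal sum divided by the total is the accuracy. confusion_matrix_np is a hypothetical helper for illustration only; the notebook uses scikit-learn's confusion_matrix.

```python
import numpy as np


def confusion_matrix_np(actuals, preds, num_classes):
    """Rows = actual class, columns = predicted class (same layout as sklearn)."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    # np.add.at also counts repeated (row, column) pairs correctly
    np.add.at(cm, (np.asarray(actuals), np.asarray(preds)), 1)
    return cm


toy_actuals = [0, 0, 1, 2, 2, 2]
toy_preds = [0, 1, 1, 2, 0, 2]
toy_cm = confusion_matrix_np(toy_actuals, toy_preds, 3)
print(toy_cm)
# [[1 1 0]
#  [0 1 0]
#  [1 0 2]]
print(np.trace(toy_cm) / toy_cm.sum())  # accuracy: 4 correct out of 6
```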

```python
import pandas as pd
from sklearn.metrics import confusion_matrix

# Create a confusion matrix that compares the actual and the predicted classes
cm = confusion_matrix(actuals, preds)
cm_df = pd.DataFrame(cm, index=list(id_to_category.values()),
                     columns=list(id_to_category.values()))
```

Below, you can observe the distribution of predictions over the classes.

```python
cm_df
```

We can also visualize the confusion matrix as ratios. In the code below, each row is divided by the number of samples of that actual class, so every cell shows the share of an actual class predicted as a given class, and the ratios on the diagonal are the ratios of correct predictions (the recall) of each class.

```python
import seaborn as sns
import matplotlib.pyplot as plt

labels = list(id_to_category.values())
# Divide each row by its total: each cell becomes the share of an actual class
# predicted as a given class, and the diagonal holds each class's recall
cm_ratio = cm_df.div(cm_df.sum(axis=1), axis=0)

plt.figure(figsize=(22, 22))
ax = sns.heatmap(cm_ratio, annot=True, fmt='.1%', cmap='Blues',
                 xticklabels=labels, yticklabels=labels)
ax.set_title('Confusion Matrix\n\n')
ax.set_xlabel('\nPredicted')
ax.set_ylabel('Actual')
## Display the visualization of the Confusion Matrix.
plt.show()
```

Please observe the ratio of correctly classified samples for each class.

You may need to take further action for the classes whose ratios of correct predictions are relatively low.
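The per-class ratios can also be listed programmatically instead of being read off the heatmap. A minimal sketch with hypothetical helpers: it row-normalizes a confusion matrix (the diagonal then equals each class's recall) and returns the classes with the lowest ratios. According to the classification report above, classes 15, 30, and 11 should come first for this model (recall 0.6404, 0.6423, and 0.6517).

```python
import numpy as np


def per_class_correct_ratio(cm):
    """Diagonal of the row-normalized confusion matrix = recall of each actual class."""
    cm = np.asarray(cm, dtype=float)
    row_totals = cm.sum(axis=1, keepdims=True)
    normalized = np.divide(cm, row_totals, out=np.zeros_like(cm), where=row_totals > 0)
    return np.diag(normalized)


def weakest_classes(cm, labels, k=3):
    """Return the k (label, ratio) pairs with the lowest correct-prediction ratio."""
    ratios = per_class_correct_ratio(cm)
    order = np.argsort(ratios)[:k]
    return [(labels[i], float(ratios[i])) for i in order]


# Hypothetical 3-class example
toy_cm = np.array([[1, 1, 0],
                   [0, 1, 0],
                   [1, 0, 2]])
print(weakest_classes(toy_cm, ["a", "b", "c"], k=2))  # 'a' (0.5) first, then 'c' (about 0.67)
```

In the notebook, weakest_classes(cm, list(id_to_category.values())) would return category names instead of toy labels.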

Create an End-to-End model

In the code above, we applied the TextVectorization layer to the dataset before feeding text to the model. If you want to make your model capable of processing raw strings (for example, to simplify deploying it), you can include the TextVectorization layer inside your model. You can call this model an End-to-End model.
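To illustrate what processing raw strings inside the model involves, here is a rough pure-Python stand-in for a TextVectorization layer in integer mode. It is a simplified assumption, not the Keras implementation: it lowercases, strips ASCII punctuation, splits on whitespace, maps unknown tokens to 1, and pads with 0 up to max_len (40 in this series). The helper name simple_vectorize and the toy vocabulary are hypothetical.

```python
import re
import string


def simple_vectorize(text, vocab, max_len=40):
    """Simplified text-to-ids conversion (not the Keras implementation).

    Assumes `vocab` maps tokens to ids, with 0 reserved for padding and
    1 for out-of-vocabulary tokens.
    """
    # Standardize: lowercase and strip ASCII punctuation
    text = text.lower()
    text = re.sub(f"[{re.escape(string.punctuation)}]", "", text)
    # Split on whitespace and map each token to its id (1 if unknown)
    ids = [vocab.get(token, 1) for token in text.split()]
    # Truncate, then pad with zeros to a fixed length
    ids = ids[:max_len]
    return ids + [0] * (max_len - len(ids))


toy_vocab = {"": 0, "[UNK]": 1, "kargo": 2, "geldi": 3}
print(simple_vectorize("Kargo geldi!", toy_vocab, max_len=5))       # [2, 3, 0, 0, 0]
print(simple_vectorize("Kargo hiç gelmedi.", toy_vocab, max_len=5))  # [2, 1, 1, 0, 0]
```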

To do so, you can create a new model that uses the TextVectorization layer (vectorize_layer) we adapted earlier as its first layer.

```python
end_to_end_model = tf.keras.Sequential([
    vectorize_layer,
    model_Conv1D,
    # model_Conv1D outputs logits; softmax turns them into class probabilities
    layers.Activation('softmax')
])

end_to_end_model.compile(
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
    optimizer="adam", metrics=['accuracy']
)
```

Notice that end_to_end_model, which takes raw text as input, reaches practically the same test accuracy (0.8191) as the original model, which takes preprocessed text.

```python
loss, accuracy = end_to_end_model.evaluate(test_features, test_targets)
print("Test accuracy: ", accuracy)
```

```
2640/2640 [==============================] - 14s 5ms/step - loss: 1.1514 - accuracy: 0.8191
Test accuracy:  0.8190795183181763
```

```python
end_to_end_model.summary()
```

```
Model: "sequential_4"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 text_vectorization (TextVec  (None, 40)               0
 torization)
 model_Conv1D (Functional)   (None, 32)                2008160
 activation_3 (Activation)   (None, 32)                0
=================================================================
Total params: 2,008,160
Trainable params: 2,008,160
Non-trainable params: 0
_________________________________________________________________
```

Let’s observe the prediction of end_to_end_model with the raw text:

```python
import numpy as np

# Raw Turkish complaint texts (English gist in the comments)
examples = [
    # A hotel in Yalova sold a one-week timeshare under pressure and refuses a refund
    "Bir fenomen aracılığı ile Yalova Terma City otel'den arandık. Tatil kazandınız buyurun 16 ocakta gelin dediler. Gittik (2 küçük çocuk, eşim ve annem ), bizi y** adlı kişi karşıladı. Tanıtım yapacağız 4 saat dedi. Daha odamıza bile geçemeden, dinlemeye fırsat vermeden bize oteli gezdirmeye başladılar. Gürültülü, müzik sesli, havasız, kalabalık (Corona olduğu dönemde) bir salonda bize tapulu 1 haftalık arsa sattılar. (psikolojik baskı ile) Tabi o yorgunlukla (amaçları da bu zaten) dinlenmeden bize otelin her detayını anlattılar. Tapumuzu almadan para istediler, güvendik aldık. IBAN numarası otele ait olmayan şahsa 30 bin tl ödedik. 1 gün sonra tapu işlemleri yapılacaktı istemiyoruz tapu, tatil dedik. Kabul etmiyorlar, paramızı vermiyorlar. Ayrıca annemin kaldığı odada ısıtma sistemi çalışmıyordu, çocuk havuzu aşırı pisti, kadınlara ait termal havuz kapalı idi, odada telefon çalışmıyordu ya da bilerek sessize alıyorlar ilgilenmemek için.",
    # A refrigerator's freezer started leaking again after a service visit
    "5 yıl kullandığım buzdolabım buzluktan şu akıtmaya başladı. Servis geldi içini boşaltın. Lastiklerinden hava alıyor sıcak suyla lastikleri yıkayın dediler. Denileni yaptım. 1 sene olmadan tekrar akıtmaya başladı",
    # A tracksuit ordered from Hepsiburada is shown as delivered but never arrived
    "Hepsiburada'dan esofman takimi aldık. 18 ocakta yola çıktı ve teslim edildi gözüküyor. Teslim adresi kayınpederimin dükkandı. Ben elemanlar aldı diye düşündüm. Fakat birkaç gün geçti getiren olmadı. Sorunca da kimsenin teslim almadığını öğrendim. Lütfen kargomuzu kime teslim ettiğinizi öğrenin, o gün dağıtım yapan kuryenize sorabilirsiniz. Gereğinin yapılacağını umuyorum, kızıma aldığım bir hediyeydi üzgünüm.",
    # A Bimcell 6GB internet gift campaign was not applied to the line
    "Bimcell tarafıma mesaj atıp 6GB internet Hediye! Evet yazıp 3121'e göndererek kampanyaya katilin,3 gün içinde 30 TL'ye Dost Orta Paket almanız karşılığında haftalık 6GB cepten internet kazanın! Şeklinde mesaj attı dediklerini yerine getirdim paketi yaptım 3121 e Evet yazarak mesaj attım ancak 24.01.2022 de yaptığım işlem hala gerçekleşmedi hediye 6 GB hattıma tanımlanmadı",
    # An Instagram eyewear shop refuses to take back a low-quality product
    "Instagram'da gözlük marketi hesabı sattığı kalitesiz ürünü geri almıyor. Gözlük çok kötü. Saplar oyuncak desen değil. Oyuncakçıdan alsam çok daha kaliteli olurdu. Bir yazdım iade edebilirsiniz diyor. Sonra yok efendim iademiz yok diyor.",
    # Nobody can be reached to cancel a complementary health insurance policy
    "Tamamlayıcı sağlık sigortamı iptal etmek istiyorum fakat ne bankadan ne NN SİGORTA'dan bir tek muhatap bile bulamıyorum. Telefonda dakikalarca tuşlama yapıp bekletiliyor kimsenin cevap verdiği yok. Zaman kaybından başka bir şey değil! İletişim kurabileceğim biri tarafından aranmak istiyorum",
    # Heating coal was not delivered to TOKİ housing in Kastamonu
    "Selamlar TOKİ ve emlak yönetimden şikayetimiz var. Kastamonu merkez örencik TOKİ 316 konut 3 gündür kömür gelmedi bir çok blokta kömür bitmiş durumda bu kış zamanında eksi 8 ila 15 derecede yaşlılar hastalar çocuklar bütün herkesi mağdur ettiler. Emlak yönetim 734.60 ton kömür anlaşması yapmış onu da geç yaptığı için zaten yüksek maliyet çıkarmıştı yeni fiyat güncellemesi yapacakmış örneğin bana 6.160 TL nin üzerine fiyat eklenecekmiş bu işi yapan sorumlu kişi veya kişilerin zamanında tedbir almamasının cezasını TOKİ de oturan insanlar çekiyor ya sistem ya da kişiler hatalı"
]

predictions = end_to_end_model.predict(examples)
for pred in predictions:
    print(id_to_category[np.argmax(pred)])
```

```
1/1 [==============================] - 0s 245ms/step
emlak-ve-insaat
internet
internet
kucuk-ev-aletleri
elektronik
elektronik
emlak-ve-insaat
```

Summary

In this part,

- we created a Deep Learning model with a one-dimensional convolutional layer (Conv1D) to classify text into multiple classes,

- we trained and tested the model,

- we observed the model performance using the Classification Report and the Confusion Matrix,

- we pointed out the importance of a balanced dataset and of checking the performance of each class, and

- we created an end-to-end model by integrating the Keras TextVectorization layer into the final model.

In the next part, we will design another Deep Learning model by using a recurrent layer (LSTM).

Thank you for your attention!

NEXT PARTS

In this tutorial series, there are several parts to cover the Text Classification with Deep Learning Models topic. You can access all the parts from this index page.

FULL CODE LINKS:

You can access the complete code as Colab Notebooks here, through the links given in each video description, or in the Murat Karakaya Akademi Github Repo.

YOUTUBE VIDEOS LINKS:

You can watch all these parts on the Murat Karakaya Akademi YouTube channel in ENGLISH or TURKISH.

Comments or Questions?

Please share your Comments or Questions.

Thank you in advance.

Do not forget to check out the next parts!

Take care!