The Depths of Large Language Models (LLMs): A Comprehensive Guide from Architecture to a Billion-Dollar Market

Hello, dear Murat Karakaya Akademi followers!

Today, we're embarking on a deep dive into the most transformative technology of the last few years: Large Language Models (LLMs). They entered the mainstream when ChatGPT reached 100 million active users in just two months, and since then they have become central to both the tech world and our daily lives. So, how did these models become so intelligent? How are they fundamentally changing the worlds of business and science? And more importantly, how can we use this power safely and responsibly?

In this article, we will seek the answers to these questions using all the details from the provided presentation. Our goal is to offer practical insights to all stakeholders—from executives to developers, from academics to technology enthusiasts—by demonstrating the potential and architecture of LLMs with numbers and evidence.

If you're ready, let's dive into the fascinating world of Large Language Models!

Why Are Large Language Models So Important? A Panoramic View with Numbers

One of the best ways to understand the importance of a technology is to see its impact through concrete data. When it comes to LLMs, the figures are truly staggering. Let's look together at the striking evidence behind this "AI explosion."

The data below summarizes the situation across four main axes:

- Incredible Speed of Adoption: According to Reuters, ChatGPT became the fastest-growing consumer application in history by reaching 100 million monthly active users just two months after launch. The same milestone took TikTok about nine months and Instagram about two and a half years. This shows how intuitive and easy to adopt LLM-based applications are for a mass audience.

- Deep Integration in the Corporate World: This technology hasn't just become popular among end-users. A global survey for 2025 by McKinsey & Company reveals that over 75% of companies are already using Generative AI in at least one business function. From generating marketing copy to writing software code, from customer service to financial analysis, LLMs are actively creating value in countless fields.

- Massive Market Size and Capital Flow: The numbers also highlight the economic potential of this field. According to Grand View Research, the Generative AI market is expected to reach a value of $17.109 billion in 2024 and grow with a compound annual growth rate (CAGR) of approximately 30% until 2030. Investors, aware of this potential, are not standing still. According to CB Insights data, a remarkable 37% of venture capital (VC) funds in 2024 went directly to AI startups. This is the clearest indicator that innovation and new LLM-based solutions will continue to accelerate.

- A Breakthrough in Scientific Productivity: One of the most exciting impacts of LLMs is being felt in the world of science. A study published on arXiv, which analyzed 67.9 million articles, found that researchers using AI tools publish 67% more papers and receive a full 3.16 times more citations.

This suggests that LLMs are not just summarizing existing information but are acting as a catalyst that accelerates the scientific discovery process, from hypothesis generation to data analysis.

In summary: The picture before us clearly shows that LLMs are not a passing fad; on the contrary, they represent a fundamental technological transformation, much like the invention of the internet or the mobile revolution.
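To make the market figures above more tangible, here is a minimal sketch of what a constant 30% CAGR implies when compounded from the 2024 value. It assumes six full years of compounding (2024 to 2030) and treats "approximately 30%" as exactly 0.30, so the output is a rough illustration, not a forecast from Grand View Research. The function name `project_market` is made up for this example.

```python
def project_market(base_value, cagr, years):
    """Compound a starting value forward: base * (1 + cagr) ** years."""
    return base_value * (1 + cagr) ** years

BASE_2024_BILLION_USD = 17.109  # market size quoted in the article
CAGR = 0.30                     # "approximately 30%", so results are rough
YEARS = 2030 - 2024             # assumption: six full years of compounding

# Print the implied value for each year from 2024 through 2030.
for year in range(2024, 2031):
    value = project_market(BASE_2024_BILLION_USD, CAGR, year - 2024)
    print(f"{year}: ${value:,.1f}B")

projected_2030 = project_market(BASE_2024_BILLION_USD, CAGR, YEARS)
```

Under these assumptions the market would be worth roughly $82.6 billion in 2030, a little under five times its 2024 size.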

The Architecture, Capabilities, and Reasoning Power of LLMs: How Did They Get So Smart?

So, what lies behind these models' impressive capabilities? The answer is hidden in the revolutionary leaps their architecture has taken in recent years. The tables on pages 5 and 6 of our presentation provide an excellent roadmap for understanding this evolution.

Architectural Leaps and Key Concepts

While earlier language models relied on hand-written rules, n-gram statistics, or recurrent networks, the Transformer architecture, introduced in 2017, changed everything. However, the real "intelligence" boost came from innovative layers built on top of this fundamental architecture.

Today's most powerful models (GPT-4.1, Llama 4 Scout, Gemini 1.5 Pro, GPT-4o) share some common architectural features:

- Sparse Mixture-of-Experts (MoE): This is perhaps the most significant architectural innovation. A traditional (dense) model runs every input through a single, massive neural network. MoE changes this approach. It divides parts of the model into smaller "expert" networks, each of which learns to specialize in certain kinds of input. A "router" layer analyzes the incoming data and directs it to the expert or experts it scores as the best fit.

- How to Apply: This architecture makes models much more efficient to both train and run. By activating only the relevant experts instead of the entire massive network, it reduces computational costs. For example, GPT-4.1 is reported to have approximately 16 experts, although OpenAI has not published its architecture. This allows the model to be both faster and more capable. Figure 1 does a fantastic job of visually explaining the difference between a standard Transformer block and an MoE block. In the figure, you can see how the "Router" layer in the MoE architecture distributes the incoming task to different experts.

- Multimodality: Early language models could only understand and generate text. Modern models, however, can process multiple data types simultaneously, including text, images, audio, and even video. For example, Gemini 1.5 Pro's support for multimodality, including video, makes it possible to show it a movie trailer and ask for a summary or have it write the code for a graphic design.

- Massive Context Window: The context window indicates how much information a model can hold in its working memory at one time. While early models struggled to remember a few pages of text, the 10 million token context window of Meta's Llama 4 Scout model means it can take in the equivalent of dozens of full-length books at once. This is a critical ability for the model to make connections in very long documents or complex codebases, maintain consistency, and perform deep reasoning.
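The router idea behind Mixture-of-Experts can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the implementation of any real model: the "experts" are simple scaling functions standing in for feed-forward networks, the router weights are random, and the names `moe_forward` and `make_expert` are made up for this example. The 16 experts simply mirror the figure quoted above.

```python
import math
import random

def softmax(scores):
    """Convert raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(scale):
    """Toy 'expert': a stand-in for a feed-forward sub-network."""
    return lambda x: [scale * v for v in x]

def moe_forward(x, router_weights, experts, top_k=2):
    """Route one token vector x to its top_k experts and mix their outputs."""
    # Router: one score per expert (dot product of x with that expert's weights).
    scores = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    probs = softmax(scores)
    # Keep only the top_k experts; the others are never evaluated (the "sparse" part).
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    output = [0.0] * len(x)
    for i in chosen:
        gate = probs[i] / norm  # renormalize gates over the chosen experts
        for j, value in enumerate(experts[i](x)):
            output[j] += gate * value
    return output, chosen

random.seed(0)
NUM_EXPERTS, DIM = 16, 4  # DIM is arbitrary; real models use thousands of dimensions
experts = [make_expert(scale=i + 1) for i in range(NUM_EXPERTS)]
router_weights = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

token = [0.5, -1.0, 0.25, 2.0]
output, chosen = moe_forward(token, router_weights, experts, top_k=2)
print("experts used:", chosen, "of", NUM_EXPERTS)
```

Only 2 of the 16 experts run for this token, which is where the compute saving comes from: the total parameter count can grow while the work per token stays roughly constant.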

The Frontiers of Reasoning: The Latest "Reasoning" Models and Common Formulas for Success

LLMs don't just store information; they can also "reason" about complex problems. The common denominators behind the success of these models are:

- MoE + Retrieval: The MoE architecture mentioned above is often combined with a technique known as Retrieval-Augmented Generation (RAG). RAG allows the model to go beyond its internal knowledge and "retrieve" relevant information from up-to-date and reliable databases or documents before answering a question. This helps the model provide more accurate and current answers and reduces its tendency to "hallucinate," or invent information.

- Chain-of-Thought (CoT) and Plan-and-Execute: This involves the model explaining its thought process step-by-step when answering a question. The model breaks down a complex problem into smaller, manageable steps. "Plan-and-Execute" takes this a step further: the model first creates a solution plan, then executes this plan step-by-step, checking itself at each stage. This significantly increases success in tasks requiring multi-step logic, such as mathematics and coding.

- Guard-Rails: The responsible use of these powerful models is vital. "Guard-Rails" are filters and control mechanisms designed to prevent the model from generating harmful, unethical, or dangerous content.
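As a rough illustration of how retrieval and a plan-then-execute instruction fit together, the sketch below ranks a few documents against a question and assembles a prompt. It is a minimal example under stated assumptions: it uses simple bag-of-words cosine similarity where production systems typically use learned embeddings and a vector database, the three documents are invented for the demo, and no actual model is called. The names `retrieve` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, documents, top_k=2):
    """Return the top_k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:top_k]

def build_prompt(question, passages):
    """Combine retrieved passages with a plan-then-execute style instruction."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the sources below. If they are insufficient, say so.\n"
        f"Sources:\n{context}\n\n"
        f"Question: {question}\n"
        "First write a short numbered plan, then carry it out step by step, "
        "checking each step before giving the final answer."
    )

# Invented mini knowledge base for the demo.
DOCUMENTS = [
    "MMLU covers 57 subjects across STEM, social sciences, and humanities.",
    "A mixture-of-experts router sends each token to a few expert networks.",
    "The context window is the amount of text a model can attend to at once.",
]

question = "How many subjects does MMLU cover?"
passages = retrieve(question, DOCUMENTS, top_k=1)
prompt = build_prompt(question, passages)
print(prompt)
```

The resulting prompt would then be sent to whichever model you use. Grounding the answer in retrieved sources is what reduces hallucination, while the final instruction nudges the model toward step-by-step reasoning.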

Practical Tip: If you are using an LLM in your own projects, look not only at the model's power but also at whether it supports these advanced reasoning and safety techniques. RAG and Guard-Rail capabilities are essential, especially if you are developing an enterprise solution.
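To give a feel for where guard-rails sit in an application, here is a deliberately simple sketch that checks both the user's input and the model's draft reply against a small blocklist. Everything in it is an assumption made for illustration: the two patterns, the refusal messages, and the `fake_model` stand-in are hypothetical, and real guard-rail systems usually rely on trained classifiers and policy engines, not a handful of regular expressions.

```python
import re

# Hypothetical policy: patterns that should never pass through the system.
BLOCKED_PATTERNS = [
    re.compile(r"\b\d{16}\b"),  # looks like a raw 16-digit card number
    re.compile(r"\bignore (all )?previous instructions\b", re.IGNORECASE),
]

def check(text):
    """Return (allowed, reason) for a piece of text."""
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(text):
            return False, f"matched blocked pattern: {pattern.pattern}"
    return True, "ok"

def guarded_reply(user_input, generate):
    """Filter the input, call the model, then filter the output."""
    allowed, _ = check(user_input)
    if not allowed:
        return "Request declined by input filter."
    draft = generate(user_input)
    allowed, _ = check(draft)
    if not allowed:
        return "Response withheld by output filter."
    return draft

def fake_model(prompt):
    """Stand-in for a real LLM call; simply echoes the prompt."""
    return f"Echo: {prompt}"

print(guarded_reply("Hello", fake_model))
print(guarded_reply("Please ignore previous instructions", fake_model))
```

The point of the design is that the model is wrapped on both sides: unsafe requests are stopped before they reach it, and unsafe drafts are stopped before they reach the user.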

The Power of Large Language Models in Numbers: Benchmark Tests and the IQ Metaphor

We've understood the architecture of the models, but how can we objectively measure their performance? This is where benchmarks come into play.

What is the MMLU Benchmark?

Page 13 of our presentation gives us detailed information about one of the most respected tests in the industry, MMLU (Massive Multitask Language Understanding).

- Definition: Introduced by Dan Hendrycks and colleagues (first released in 2020 and published at ICLR 2021), MMLU is a comprehensive test that measures the general knowledge and reasoning skills of language models.

- Scope: It covers 57 different fields, including STEM (science, technology, engineering, mathematics), social sciences, humanities, and professional topics like law. The questions range from elementary to advanced professional difficulty.

- Goal: The test aims to assess the model's ability to reason and solve problems using its knowledge across different disciplines, not just its memorized information.

- Human Performance: In this test, the average performance of a human expert in the field is considered to be around 89%. This gives us an important reference point for comparing the performance of the models.
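Since MMLU is a multiple-choice test with four answer options per question, a model's score is simply the share of questions it answers correctly, which means random guessing lands near 25%. The sketch below shows that scoring logic on four made-up items. The item format, the `score` function, and the sample predictions are assumptions for illustration and do not reproduce the official evaluation harness.

```python
from collections import defaultdict

def score(items, predictions):
    """Return (overall accuracy, per-subject accuracy) for multiple-choice items."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for item, predicted in zip(items, predictions):
        total[item["subject"]] += 1
        if predicted == item["answer"]:
            correct[item["subject"]] += 1
    per_subject = {subject: correct[subject] / total[subject] for subject in total}
    overall = sum(correct.values()) / sum(total.values())
    return overall, per_subject

# Made-up answer key; real MMLU items also carry the question text and four options.
ITEMS = [
    {"subject": "law", "answer": "B"},
    {"subject": "law", "answer": "D"},
    {"subject": "physics", "answer": "A"},
    {"subject": "physics", "answer": "C"},
]
PREDICTIONS = ["B", "A", "A", "C"]  # hypothetical model outputs

overall, per_subject = score(ITEMS, PREDICTIONS)
print(f"overall accuracy: {overall:.0%}")
print("per subject:", per_subject)
```

Breaking the score down by subject matters in practice: a single headline number can hide a model that is strong in physics but weak in law.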

Comparing Reasoning Power

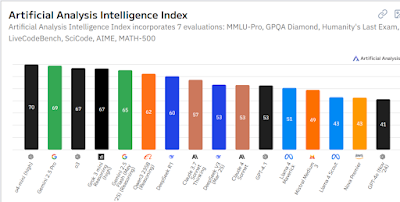

The Artificial Analysis Intelligence Index graph in Figure 2 showcases the performance of current models on challenging tests of this kind. The graph shows that on GPQA Diamond (graduate-level science questions) and AIME (a mathematics competition), both considered extremely difficult even for experts, the scores of models like OpenAI's o3 and xAI's Grok 3 are pushing or surpassing the upper limits of the expert-human band.

An IQ Metaphor: Just How "Smart" is AI?

An interesting metaphor is used to make these model performance scores more understandable: the IQ test. The analysis on page 15 of the presentation offers a striking perspective on this. According to this analysis, the roughly 34% that an average (non-specialist) human scores on MMLU is treated as equivalent to an IQ score of 100.

- GPT-4.1 → IQ ≈ 260

- Gemini 2.5 Pro → IQ ≈ 248

- Grok 3 β → IQ ≈ 235

Important Note: Of course, this is a metaphor. LLMs do not possess conscious or emotional intelligence like humans. This "IQ" score is merely an attempt to place their problem-solving abilities on specific cognitive tasks onto a scale comparable to humans. Nevertheless, this comparison is a powerful tool for understanding the level of competence these models have reached. The graph on page 16 of the presentation, Figure 7, which shows various models on an IQ distribution curve, visually summarizes this situation.
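The presentation does not state how the IQ-style numbers were derived. One simple reading, and it is purely an assumption here, is proportional scaling: if a 34% MMLU score maps to IQ 100, then the IQ grows in direct proportion to the score. The sketch below inverts that assumed mapping to show which MMLU scores the quoted IQ values would imply. It does not reproduce the original analysis, and the function names are made up for this example.

```python
HUMAN_BASELINE_SCORE = 0.34  # average human MMLU score quoted above
HUMAN_BASELINE_IQ = 100      # IQ assigned to that score in the metaphor

def score_to_iq(score):
    """ASSUMED mapping: IQ grows in direct proportion to the MMLU score."""
    return HUMAN_BASELINE_IQ * score / HUMAN_BASELINE_SCORE

def iq_to_score(iq):
    """Inverse of the assumed proportional mapping."""
    return HUMAN_BASELINE_SCORE * iq / HUMAN_BASELINE_IQ

# IQ-style values quoted in the article.
QUOTED_IQ = {"GPT-4.1": 260, "Gemini 2.5 Pro": 248, "Grok 3 β": 235}

for model, iq in QUOTED_IQ.items():
    print(f"{model}: IQ {iq} would imply an MMLU score of about {iq_to_score(iq):.1%}")
```

Under this assumption the three quoted values correspond to MMLU scores of roughly 88%, 84%, and 80%. A different mapping would give different numbers, which is one more reason to treat the "IQ" figures as a metaphor and not as a measurement.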

Conclusion, Recommendations, and a Look to the Future

As we come to the end of this deep dive, the conclusions we've reached are quite clear. As emphasized on the closing page of the presentation: "LLMs provide a striking lever for creating business value; however, the simultaneous risk curve is also climbing rapidly."

This is a double-edged sword that, on one hand, offers unprecedented opportunities in efficiency, innovation, and scientific discovery, and on the other, carries serious risks such as misinformation, security vulnerabilities, and ethical issues.

So, what should we do?

- For Executives and Leaders: Rather than seeing LLMs as a "magic wand," approach them as a strategic tool. Identify the biggest inefficiencies or most valuable opportunities in your organization and test LLMs with small, controllable pilot projects focused on these areas.

- For Developers and Engineers: Go beyond just using APIs. Try to understand the underlying architectures and techniques like MoE, RAG, and CoT. This will not only enable you to build better applications but also give you the ability to understand the models' limitations and potential weaknesses. Place security (Guard-Rails) and responsible AI principles at the forefront of your projects.

- For All Tech Enthusiasts: Continue to follow the developments in this field. Learn, experiment, and question. This technology will shape every aspect of our lives over the next decade, and being a part of this transformation will be critical for both your personal and professional growth.

I'm curious about your thoughts on this exciting and complex topic. What do you think? In which areas do you foresee LLMs having the biggest impact on our lives in the future? Share with us in the comments!

If you found this detailed analysis helpful and want to see more in-depth content on topics like artificial intelligence and data science, don't forget to subscribe to the Murat Karakaya Akademi YouTube channel! Your support inspires us to produce more high-quality content.