tf.data: Build Efficient TensorFlow Input Pipelines for Image Datasets

1. Download Sample Data from a Web Site

I selected the dataset Image Data for Multi-Instance Multi-Label Learning

Download the dataset using wget:

!wget https://www.dropbox.com/s/0htmeoie69q650p/miml_dataset.zip

Unzip it:

!unzip -o -q miml_dataset.zip

Take a look at the folders and files:

ls miml_dataset/

images/ miml_labels_1.csv miml_labels_2.csv

So we have an images folder and 2 label data files.

2. Open the label data file first

import pandas as pd

df=pd.read_csv("./miml_dataset/miml_labels_1.csv")

df.head()

Record the label names

LABELS=["desert", "mountains", "sea", "sunset", "trees"]

3. Build Image File List Dataset

Now we can gather the image file names and paths by traversing the images/ folder. There are two ways to load a file list from an image directory with the tf.data.Dataset module, as follows.

3.1. Create the file list dataset by Dataset.from_tensor_slices()

We can use the Path class and its glob() method from the pathlib module to browse the image folder and collect all the image file names and paths.

import pathlib
import PIL.Image

data_dir = pathlib.Path("miml_dataset")
filenames = list(data_dir.glob('images/*.jpg'))
print("image count: ", len(filenames))
print("first image: ", str(filenames[0]))
PIL.Image.open(str(filenames[0]))

image count: 2000

first image: miml_dataset/images/1516.jpg

We can create a list of image file paths:

fnames = []
for fname in filenames:
    fnames.append(str(fname))
fnames[:5]

['miml_dataset/images/1516.jpg',
 'miml_dataset/images/1938.jpg',
 'miml_dataset/images/927.jpg',
 'miml_dataset/images/1868.jpg',
 'miml_dataset/images/533.jpg']

- In most cases, we would like to test the input pipeline on a small portion of the data first, to check the performance and accuracy of the tf.data pipeline design.

- Here, we select a very small set of images and observe the effects of the map, prefetch, cache, and batch methods.

- We can supply the image file list to tf.data.Dataset.from_tensor_slices() to create a dataset of file paths.

import tensorflow as tf

ds_size = len(fnames)
print("Number of images in folders: ", ds_size)
number_of_selected_samples = 10
filelist_ds = tf.data.Dataset.from_tensor_slices(fnames[:number_of_selected_samples])
ds_size = filelist_ds.cardinality().numpy()
print("Number of selected samples for dataset: ", ds_size)

Number of images in folders: 2000

Number of selected samples for dataset: 10

3.2. Create the file list dataset by Dataset.list_files()

As stated in the tf.data.Dataset documentation:

- list_files(file_pattern, shuffle=None, seed=None) is used for creating a dataset of all files matching one or more glob patterns.

- The file_pattern argument should be a small number of glob patterns.

- "If your filenames have already been globbed, use Dataset.from_tensor_slices(filenames) instead, as re-globbing every filename with list_files may result in poor performance with remote storage systems."

Since we have already globbed the file names and paths above, we will not use the approach below.

However, I would like to show you how to do it:

- First, provide the image folder:

data_dir = pathlib.Path("miml_dataset")

- Then, supply the glob pattern to the list_files() method:

filelist_ds = tf.data.Dataset.list_files(str(data_dir/'*/*'))

Note that list_files() has no argument for limiting the number of selected samples: the dataset contains every file that matches the pattern, so a subset has to be selected afterwards, for example with take().
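As a minimal sketch of selecting a subset after list_files(), the snippet below builds a throwaway folder of empty .jpg files (tmp_dir and the file names are made up for illustration, not part of the MIML dataset), lists them, and keeps the first three paths with take(). It passes shuffle=False because list_files() shuffles the file order by default.

```python
import pathlib
import tempfile

import tensorflow as tf

# Hypothetical stand-in for the images/ folder: five empty .jpg files.
tmp_dir = pathlib.Path(tempfile.mkdtemp())
(tmp_dir / "images").mkdir()
for i in range(5):
    (tmp_dir / "images" / f"{i}.jpg").write_bytes(b"")

# shuffle=False keeps a deterministic order; the default shuffles the files.
files_ds = tf.data.Dataset.list_files(str(tmp_dir / "images" / "*.jpg"), shuffle=False)

# list_files() has no "limit" argument, so take() selects the subset.
subset_ds = files_ds.take(3)
selected = [p.numpy().decode("utf-8") for p in subset_ds]
print(selected)
```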

4. Prepare Auxiliary Functions

4.1 First check if the filelist_ds is created as expected by getting some samples

print("Number of files in the dataset: ", filelist_ds.cardinality().numpy())
print("3 samples:")
for a in filelist_ds.take(3):
    fname = a.numpy().decode("utf-8")
    print(fname)
    display(PIL.Image.open(fname))

Number of files in the dataset: 10

3 samples:

miml_dataset/images/1516.jpg

miml_dataset/images/1938.jpg

miml_dataset/images/927.jpg

4.2 Get the label for the given image file name

Remember that we have read the label info into a pandas DataFrame:

df.head()

We can code a simple function get_label(file_path) to match the file name with the corresponding one-hot encoded labels as below:

def get_label(file_path):
    print("get_label acivated...")
    parts = tf.strings.split(file_path, '/')
    file_name = parts[-1]
    # select the row for this file and keep only the one-hot label columns
    labels = df[df["Filenames"]==file_name][LABELS].to_numpy().squeeze()
    return tf.convert_to_tensor(labels)

Let’s check the function:

for a in filelist_ds.take(5):
    print("file_name: ", a.numpy().decode("utf-8"))
    print(get_label(a).numpy())

file_name: miml_dataset/images/1516.jpg

get_label acivated...

[0 1 0 1 0]

file_name: miml_dataset/images/1938.jpg

get_label acivated...

[0 0 0 0 1]

file_name: miml_dataset/images/927.jpg

get_label acivated...

[0 0 1 0 1]

file_name: miml_dataset/images/1868.jpg

get_label acivated...

[0 0 0 0 1]

file_name: miml_dataset/images/533.jpg

get_label acivated...

[0 1 0 0 0]
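The lookup itself can be tried without TensorFlow. The following is a small sketch on a toy table, assuming the same column layout as the label file (a Filenames column plus one column per label); toy_df, label_table, and lookup_label are illustrative names. Indexing the table by file name once is a possible alternative to filtering the whole DataFrame on every call.

```python
import numpy as np
import pandas as pd

LABELS = ["desert", "mountains", "sea", "sunset", "trees"]

# Toy label table with the assumed layout of miml_labels_1.csv.
toy_df = pd.DataFrame(
    {
        "Filenames": ["1.jpg", "2.jpg"],
        "desert": [1, 0],
        "mountains": [0, 1],
        "sea": [0, 0],
        "sunset": [0, 0],
        "trees": [0, 1],
    }
)

# Index by file name once so that each lookup is a direct .loc access.
label_table = toy_df.set_index("Filenames")[LABELS]


def lookup_label(file_path: str) -> np.ndarray:
    """Return the one-hot label vector for the file at file_path."""
    file_name = file_path.split("/")[-1]
    return label_table.loc[file_name].to_numpy()


print(lookup_label("some_folder/images/2.jpg"))
```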

4.3 Process the image

Let’s process (decode, scale, resize) the images in the process_img() function so that we can save time during training:

IMG_WIDTH, IMG_HEIGHT = 32, 32

def process_img(img):
    print("process_img acivated...")
    # decode as a color image (3 channels)
    img = tf.image.decode_jpeg(img, channels=3)
    # convert the uint8 tensor to floats in the [0, 1] range
    img = tf.image.convert_image_dtype(img, tf.float32)
    # resize; tf.image.resize expects the size as [height, width]
    return tf.image.resize(img, [IMG_HEIGHT, IMG_WIDTH])

4.4. Combine the label and image

We use the combine_images_labels() function:

- to load the image data from the given file path

- to process the image

- to get the one-hot encoded labels

- to combine image data (X_train) with label (y_train) to create the train_ds
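The loading and processing steps in the list above can be tried in isolation before they are wired into one function. The snippet below is a minimal sketch that assumes a synthetic random image written to a temporary file (pixels and demo_path are illustrative names, not part of the dataset) and then reads, decodes, scales, and resizes it.

```python
import pathlib
import tempfile

import numpy as np
import tensorflow as tf

# Synthetic stand-in data: a random 48x64 RGB image saved as a JPEG file.
rng = np.random.default_rng(0)
pixels = rng.integers(0, 256, size=(48, 64, 3), dtype=np.uint8)
demo_path = str(pathlib.Path(tempfile.mkdtemp()) / "demo.jpg")
tf.io.write_file(demo_path, tf.io.encode_jpeg(pixels))

raw = tf.io.read_file(demo_path)                     # raw JPEG bytes
img = tf.image.decode_jpeg(raw, channels=3)          # uint8 tensor, 3 channels
img = tf.image.convert_image_dtype(img, tf.float32)  # floats in the [0, 1] range
img = tf.image.resize(img, [32, 32])                 # size is [height, width]

print(img.shape, img.dtype)
```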

def combine_images_labels(file_path: tf.Tensor):
    print("combine_images_labels acivated...")
    img = tf.io.read_file(file_path)
    print("image file read...")
    img = process_img(img)
    label = get_label(file_path)
    return img, label

5. Decide Split Ratio

We can decide the sizes of the train and test datasets and split the file names accordingly:

train_ratio = 0.80
# take() and skip() expect an integer count
train_size = int(ds_size * train_ratio)
ds_train = filelist_ds.take(train_size)
ds_test = filelist_ds.skip(train_size)
print("train size: ", ds_train.cardinality().numpy())
print("test size: ", ds_test.cardinality().numpy())

train size: 8

test size: 2
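The take()/skip() split is easy to verify on a toy dataset. This sketch uses tf.data.Dataset.range(10) as a hypothetical stand-in for the ten file paths and checks which elements end up in each part.

```python
import tensorflow as tf

toy_ds = tf.data.Dataset.range(10)       # stand-in for 10 file paths
toy_train_size = int(10 * 0.80)          # integer count for take() and skip()

toy_train = toy_ds.take(toy_train_size)  # first 8 elements
toy_test = toy_ds.skip(toy_train_size)   # everything after the first 8

train_items = [int(x) for x in toy_train.as_numpy_iterator()]
test_items = [int(x) for x in toy_test.as_numpy_iterator()]
print(train_items, test_items)
```

Because take() and skip() keep the original order, shuffling the file list before the split is worth considering whenever the files are stored in a meaningful order.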

6. Build the train and test datasets

In training and test datasets, we will have:

- image data

- label data

So far, we have only the file paths and names stored in these datasets.

ds_train.element_spec

TensorSpec(shape=(), dtype=tf.string, name=None)

Notice that we have only a single tensor of the file path (tf.string).

6.1. map() method

Maps map_func across the elements of this dataset.

We will use the map() method to call a lambda function that consumes each file path and returns an (image, label) pair.

As stated in the official documentation:

“To use Python code inside of the function you have a few options:

1) Rely on AutoGraph to convert Python code into an equivalent graph computation. The downside of this approach is that AutoGraph can convert some but not all Python code.

2) Use tf.py_function, which allows you to write arbitrary Python code but will generally result in worse performance than 1).”

Here, I use tf.py_function because, unfortunately, AutoGraph did not work on the combine_images_labels() function properly.

ds_train = ds_train.map(lambda x: tf.py_function(func=combine_images_labels,
                                                 inp=[x], Tout=(tf.float32, tf.int64)),
                        num_parallel_calls=tf.data.AUTOTUNE,
                        deterministic=False)

# Note: prefetch() returns a new dataset; the result is not assigned here,
# so ds_train itself is left unchanged.
ds_train.prefetch(ds_size-ds_size*train_ratio)

<PrefetchDataset shapes: (<unknown>, <unknown>), types: (tf.float32, tf.int64)>

6.2. Improve map() performance

Furthermore, as noted in the official documentation:

“Performance can often be improved by setting num_parallel_calls so that map will use multiple threads to process elements. If deterministic order isn't required, it can also improve performance to set deterministic=False.”

Since reading files from a hard disk takes a long time, we apply both suggestions.

ds_test = ds_test.map(lambda x: tf.py_function(func=combine_images_labels,
                                               inp=[x], Tout=(tf.float32, tf.int64)),
                      num_parallel_calls=tf.data.AUTOTUNE,
                      deterministic=False)

# Note: as above, the dataset returned by prefetch() is not assigned.
ds_test.prefetch(ds_size-ds_size*train_ratio)

<PrefetchDataset shapes: (<unknown>, <unknown>), types: (tf.float32, tf.int64)>
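To see what deterministic=False changes, here is a small sketch on a toy dataset (squaring the numbers 0 to 7 stands in for the image loading). The mapped elements are the same as in a sequential map; only their order is no longer guaranteed, which is why the result is sorted before printing.

```python
import tensorflow as tf

squares_ds = tf.data.Dataset.range(8).map(
    lambda x: x * x,
    num_parallel_calls=tf.data.AUTOTUNE,  # let tf.data pick the parallelism
    deterministic=False,                  # allow elements to arrive out of order
)

# Sort because the arrival order is not guaranteed with deterministic=False.
squares = sorted(int(v) for v in squares_ds.as_numpy_iterator())
print(squares)
```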

We can check the element spec of the resulting datasets:

ds_train.element_spec

(TensorSpec(shape=<unknown>, dtype=tf.float32, name=None),
 TensorSpec(shape=<unknown>, dtype=tf.int64, name=None))

Notice that each element is now a tuple of tensors: the image (tf.float32) and the one-hot encoded label (tf.int64).
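The shapes are reported as unknown because tf.py_function cannot infer static shapes from arbitrary Python code. One possible way to restore them, sketched below, is to call tf.ensure_shape on the outputs inside the mapped function. Here toy_load is a hypothetical stand-in for combine_images_labels() that fabricates an image and a label from an index.

```python
import numpy as np
import tensorflow as tf


def toy_load(index):
    # Hypothetical stand-in for combine_images_labels(): builds fake data.
    i = int(index.numpy())
    image = np.full((32, 32, 3), i / 10.0, dtype=np.float32)
    label = np.array([i % 2, 0, 1, 0, 0], dtype=np.int64)
    return image, label


def load_with_shapes(index):
    image, label = tf.py_function(
        func=toy_load, inp=[index], Tout=(tf.float32, tf.int64))
    # Declare the shapes that py_function could not infer.
    image = tf.ensure_shape(image, [32, 32, 3])
    label = tf.ensure_shape(label, [5])
    return image, label


toy_ds = tf.data.Dataset.range(4).map(
    load_with_shapes, num_parallel_calls=tf.data.AUTOTUNE)
image_spec, label_spec = toy_ds.element_spec
print(image_spec.shape, label_spec.shape)
```

Known static shapes are useful later on, since some layers and batching options need them when the model is built.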

6.3. Observe some samples

Let’s look into the dataset elements:

for image, label in ds_train.take(1):
    print("Image shape: ", image.shape)
    print("Label: ", label.numpy())

combine_images_labels acivated...

image file read...

process_img acivated...

get_label acivated...

combine_images_labels acivated...

image file read...

process_img acivated...

get_label acivated...

Image shape: combine_images_labels acivated...

(32, 32, 3)

image file read...

process_img acivated...

Label: [0 1 0 1 0]

get_label acivated...

Please note that the tf.data pipeline works by executing all the functions we have coded so far! The log lines above are interleaved because map() runs several calls in parallel.

def covert_onehot_string_labels(label_string, label_onehot):
    labels = []
    for i, label in enumerate(label_string):
        if label_onehot[i]:
            labels.append(label)
    if len(labels) == 0:
        labels.append("NONE")
    return labels

covert_onehot_string_labels(LABELS, [0,1,1,0,1])

['mountains', 'sea', 'trees']

def show_samples(dataset):
    fig = plt.figure(figsize=(16, 16))
    columns = 3
    rows = 3
    print(columns*rows, "samples from the dataset")
    i = 1
    for a, b in dataset.take(columns*rows):
        fig.add_subplot(rows, columns, i)
        plt.imshow(np.squeeze(a))
        plt.title("image shape:" + str(a.shape) + " (" + str(b.numpy()) + ") " +
                  str(covert_onehot_string_labels(LABELS, b.numpy())))
        i = i+1
    plt.show()

show_samples(ds_train)

9 samples from the dataset

combine_images_labels acivated...

image file read...

process_img acivated...

combine_images_labels acivated...

image file read...

process_img acivated...

get_label acivated...

get_label acivated...

combine_images_labels acivated...

image file read...

process_img acivated...

get_label acivated...

combine_images_labels acivated...

image file read...

process_img acivated...

get_label acivated...

combine_images_labels acivated...

image file read...

process_img acivated...

get_label acivated...

combine_images_labels acivated...

image file read...

process_img acivated...

get_label acivated...

combine_images_labels acivated...

image file read...

process_img acivated...

get_label acivated...

combine_images_labels acivated...

image file read...

process_img acivated...

get_label acivated...

print("Number of samples in train: ", ds_train.cardinality().numpy(),
      " in test: ", ds_test.cardinality().numpy())

Number of samples in train: 8 in test: 2

7. Configure Datasets for Training & Testing

7.1. Decide Batch Size

BATCH_SIZE=4

7.2 Configure for performance

As discussed in Better performance with the tf.data API, there are several ways to increase the performance of input data pipelines in tf.data.

By increasing the performance of the input pipeline, we can decrease the overall train and test processing time.

The general strategy is to overlap the input pipeline steps (reading file paths, loading and processing image data, filtering label info, converting them to image data and label tuples, etc.) with batch computation during the train or test phase.

Here, I briefly summarize the most relevant methods:

- Prefetching: Prefetching overlaps the preprocessing and model execution of a training step.

- Caching: The tf.data.Dataset.cache transformation can cache a dataset, either in memory or on local storage. This will save some operations (like file opening and data reading) from being executed during each epoch.

- Batching: Combines consecutive elements of this dataset into batches.

The exact order of these transformations depends on several factors. For more details, please refer to Better performance with the tf.data API.

Below, I will apply these transformations step by step in different orders.

ds_train_batched = ds_train.batch(BATCH_SIZE).prefetch(tf.data.experimental.AUTOTUNE).cache()
ds_test_batched = ds_test.batch(BATCH_SIZE).prefetch(tf.data.experimental.AUTOTUNE).cache()
print("Number of batches in train: ", ds_train_batched.cardinality().numpy())
print("Number of batches in test: ", ds_test_batched.cardinality().numpy())

Number of batches in train: 2

Number of batches in test: 1
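The batch counts above follow from simple arithmetic: the number of samples divided by the batch size, rounded up, because batch() keeps the final partial batch unless drop_remainder=True is passed. A small sketch of that calculation (expected_batches is an illustrative helper, not a tf.data function):

```python
import math


def expected_batches(num_samples: int, batch_size: int, drop_remainder: bool = False) -> int:
    """Number of batches produced when num_samples elements are batched."""
    if drop_remainder:
        return num_samples // batch_size         # the partial batch is dropped
    return math.ceil(num_samples / batch_size)   # the partial batch is kept


print(expected_batches(8, 4))  # train: 8 samples, batch size 4
print(expected_batches(2, 4))  # test: 2 samples, batch size 4
```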

8. Use the Datasets in Train and Test

8.1. Create a CNN model

To train fast, let’s use Transfer Learning by importing VGG16:

from tensorflow import keras

base_model = keras.applications.VGG16(
    weights='imagenet',  # Load weights pre-trained on ImageNet.
    input_shape=(32, 32, 3),  # VGG16 expects min 32 x 32
    include_top=False)  # Do not include the ImageNet classifier at the top.
base_model.trainable = False

Create a simple classification model by adding the necessary layers to the base VGG16:

number_of_classes = 5

inputs = keras.Input(shape=(32, 32, 3))
x = base_model(inputs, training=False)
x = keras.layers.GlobalAveragePooling2D()(x)
initializer = tf.keras.initializers.GlorotUniform(seed=42)
activation = tf.keras.activations.softmax  # None # tf.keras.activations.sigmoid or softmax
outputs = keras.layers.Dense(number_of_classes,
                             kernel_initializer=initializer,
                             activation=activation)(x)
model = keras.Model(inputs, outputs)

8.2. Compile the model

model.compile(optimizer=keras.optimizers.Adam(),
              loss=keras.losses.CategoricalCrossentropy(),  # default from_logits=False
              metrics=[keras.metrics.CategoricalAccuracy()])

8.3. Set up TensorBoard to analyze the model and input pipeline

You can use the input-pipeline analysis or tf_data_bottleneck_analysis in the PROFILE\Tools tab.

For the details of these analyses, please refer to Optimize TensorFlow performance using the Profiler.

%tensorboard --logdir logs/
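The training call in the next step passes a tensorboard_callback object whose definition is not shown in this post. A minimal sketch of how such a callback could be created is given below; the timestamped log_dir and the profile_batch range are assumptions chosen for this tiny dataset, not necessarily the settings used for the results reported here.

```python
import datetime

import tensorflow as tf

# Assumed log location under logs/, matching the --logdir used above.
log_dir = "logs/" + datetime.datetime.now().strftime("%Y%m%d-%H%M%S")

tensorboard_callback = tf.keras.callbacks.TensorBoard(
    log_dir=log_dir,
    profile_batch=(1, 2),  # assumed: profile batches 1 to 2 for the Profile tab
)
```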

8.4. Train the model

model.fit(ds_train_batched, epochs=2, callbacks=[tensorboard_callback])

Epoch 1/2

combine_images_labels acivated...

combine_images_labels acivated...

image file read...

process_img acivated...

image file read...

process_img acivated...

get_label acivated...

get_label acivated...

combine_images_labels acivated...

image file read...

process_img acivated...

get_label acivated...

combine_images_labels acivated...

image file read...

process_img acivated...

get_label acivated...

combine_images_labels acivated...

image file read...

process_img acivated...

get_label acivated...

combine_images_labels acivated...

image file read...

process_img acivated...

get_label acivated...

combine_images_labels acivated...

image file read...

process_img acivated...

get_label acivated...

1/2 [==============>...............] - ETA: 3s - loss: 2.9083 - categorical_accuracy: 0.2500

image file read...

process_img acivated...

get_label acivated...

2/2 [==============================] - 5s 1s/step - loss: 2.6927 - categorical_accuracy: 0.1667

Epoch 2/2

2/2 [==============================] - 0s 62ms/step - loss: 2.5507 - categorical_accuracy: 0.2500

8.5 Observations for the different input data pipeline designs

ds_train_batched=ds_train_batched.cache().prefetch(tf.data.experimental.AUTOTUNE)

During the first epoch, the input data pipeline executes all the steps (get the label, process the image, combine them, etc.).

However, after the first epoch, the input data pipeline DOES NOT have to execute these steps again because the dataset has already been cached by cache().

ds_train_batched=ds_train_batched

Now, we can try the input data pipeline without the cache() transformation and observe that, at each epoch, the pipeline re-runs all the functions, which is too time-consuming.

ds_train_batched=ds_train_batched.prefetch(tf.data.experimental.AUTOTUNE)

If we use prefetch() but not cache(), we observe that the input data pipeline prepares all the batches before the training epochs start.
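The effect of cache() can also be measured directly by counting how often the mapped Python function runs. The sketch below is a toy experiment, not the pipeline from this post: counted_identity is a hypothetical stand-in for the image loading function, and the dataset holds 8 integers batched by 4. With cache() the function should run only during the first epoch; without it, it runs again in every epoch.

```python
import tensorflow as tf

call_count = {"n": 0}


def counted_identity(x):
    # Stand-in for the expensive loading function; counts its invocations.
    call_count["n"] += 1
    return x


def make_ds(use_cache: bool) -> tf.data.Dataset:
    ds = tf.data.Dataset.range(8).map(
        lambda x: tf.py_function(counted_identity, inp=[x], Tout=tf.int64))
    ds = ds.batch(4)
    if use_cache:
        ds = ds.cache()  # keep the finished batches in memory
    return ds.prefetch(tf.data.AUTOTUNE)


def calls_over_two_epochs(use_cache: bool) -> int:
    call_count["n"] = 0
    ds = make_ds(use_cache)
    for _epoch in range(2):
        for _batch in ds:  # one full pass over the data, like one epoch
            pass
    return call_count["n"]


with_cache = calls_over_two_epochs(use_cache=True)
without_cache = calls_over_two_epochs(use_cache=False)
print("calls with cache:", with_cache, "without cache:", without_cache)
```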

- Notice that the total training time and the time per epoch vary considerably depending on the design of the input data pipeline.

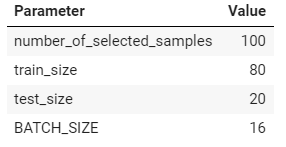

Some experiments with a small portion of the data, together with the hyperparameter values used, are as follows:

Observed durations (reported by TF)

9. Conclusion

The best practices for designing performant TensorFlow input pipelines can be summarized as follows:

- Use the prefetch transformation to overlap the work of a producer and consumer.

- Parallelize the data reading transformation using the interleave transformation.

- Parallelize the map transformation by setting the num_parallel_calls argument.

- Use the cache transformation to cache data in memory during the first epoch.

- Vectorize user-defined functions passed into the map transformation

- Reduce memory usage when applying the interleave, prefetch, and shuffle transformations.

As a very quick recipe, I suggest using the following input pipeline:

batch(BATCH_SIZE).cache().prefetch(tf.data.experimental.AUTOTUNE)

However, there are several factors affecting the performance of the designed input pipeline.

Therefore, please read Better performance with the tf.data API for more details.
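The quick recipe can be wrapped in a small helper so that the train and test datasets are configured identically. This is only a sketch: configure_for_performance is an illustrative name, and a toy range dataset stands in for the mapped (image, label) dataset.

```python
import tensorflow as tf


def configure_for_performance(ds: tf.data.Dataset, batch_size: int) -> tf.data.Dataset:
    """Apply the batch -> cache -> prefetch recipe to a dataset."""
    ds = ds.batch(batch_size)
    ds = ds.cache()                       # reuse the batches after the first epoch
    return ds.prefetch(tf.data.AUTOTUNE)  # overlap input preparation and training


toy_batches = configure_for_performance(tf.data.Dataset.range(10), batch_size=4)
batch_lengths = [len(batch) for batch in toy_batches.as_numpy_iterator()]
print(batch_lengths)
```

Caching after batching stores fixed batches, so the same samples are grouped together in every epoch; when per-epoch shuffling matters, caching before shuffle() and batch() is the usual alternative.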

References

tf.data: Build TensorFlow input pipelines

Better performance with the tf.data API

Analyze tf.data performance with the TF Profiler